Researchers from Stanford, the University of Washington, and Google DeepMind have created AI agents that can closely mimic human behavior in social experiments.

According to the study, such simulations could serve as a laboratory for testing theories in fields such as economics, sociology, organizational science, and political science. The team built these agents using interview data from more than 1,000 people selected to represent the US population across age, gender, education, and political views.

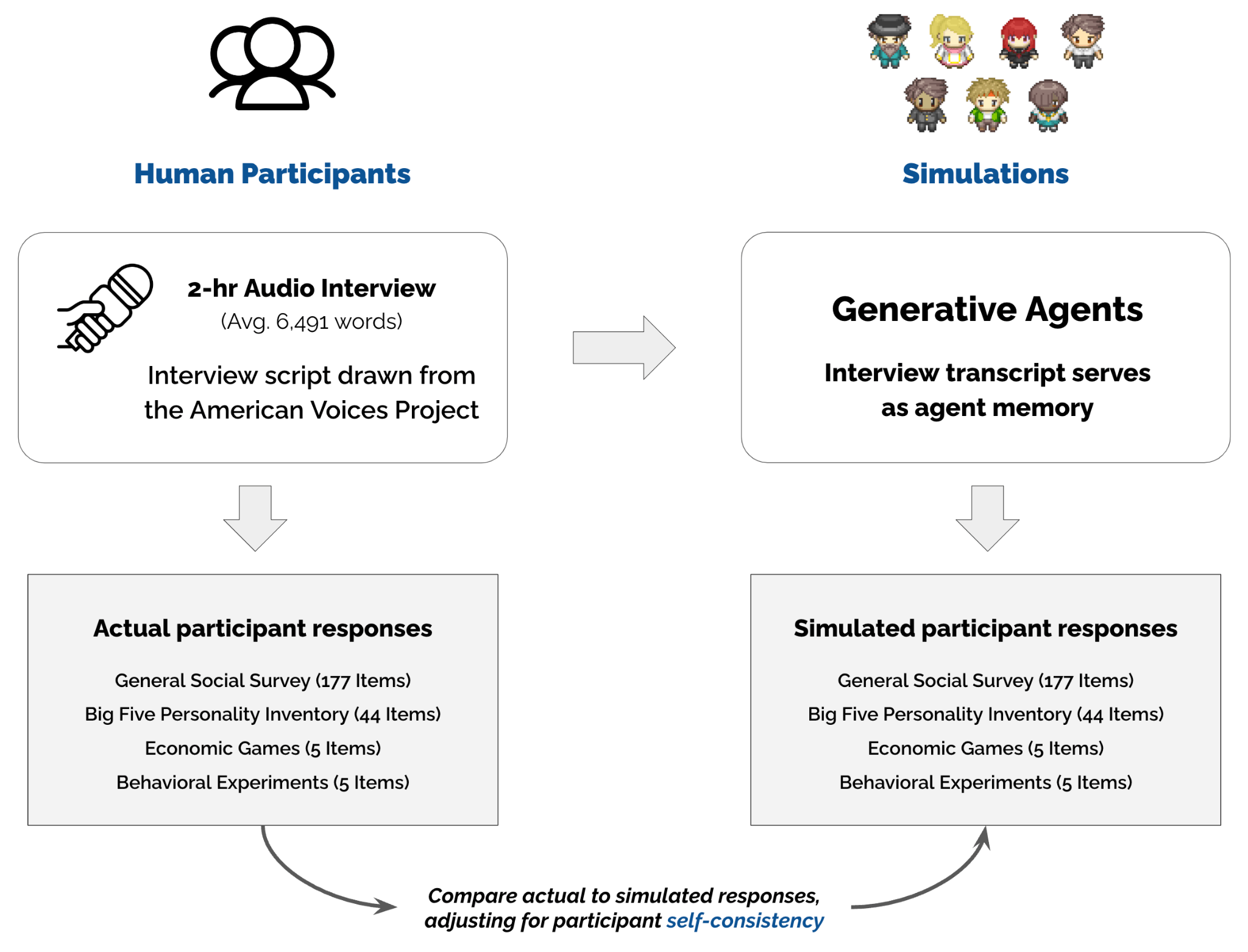

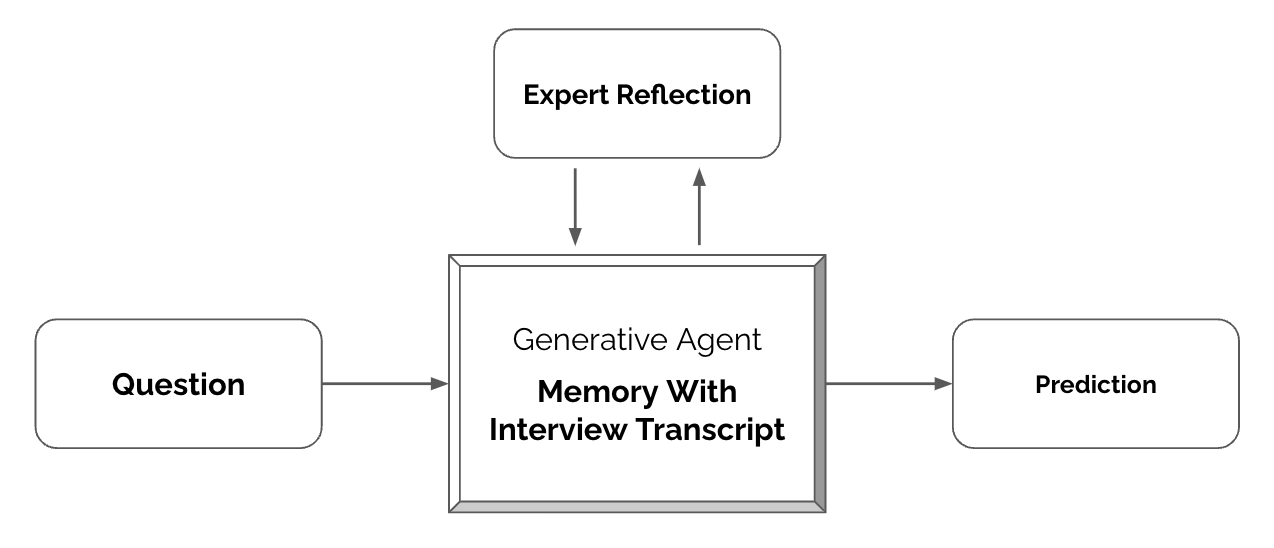

Generative agents use interview transcripts as memory aids to reproduce authentic behavior in various experiments. | Image: Park et al.

The system works by combining detailed interview transcripts with GPT-4o. When someone queries an agent, the system loads that participant's interview transcript into the model and instructs it to imitate the person based on their interview responses. To create these transcripts, the researchers conducted two-hour interviews with each participant and used OpenAI's Whisper model to convert the conversations to text.
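The article describes this mechanism only at a high level. As a rough illustration, here is a minimal Python sketch of the prompt-assembly step, assuming the full transcript goes into a system message and the question into a user message. The class name, method name, and prompt wording are hypothetical and not taken from the paper, and the sketch only builds the messages; it does not call GPT-4o.

```python
from dataclasses import dataclass


@dataclass
class InterviewAgent:
    """Hypothetical wrapper: one simulated agent per interviewed participant."""

    participant_id: str
    transcript: str  # full text of the participant's interview (e.g. a Whisper transcription)

    def build_messages(self, question: str) -> list[dict[str, str]]:
        """Put the whole transcript in context and ask the model to answer
        the question the way this participant would."""
        system = (
            "Below is the transcript of an interview with a research participant. "
            "Answer the following question the way this person would, "
            "based on what they said in the interview.\n\n"
            f"=== INTERVIEW TRANSCRIPT ===\n{self.transcript}\n=== END TRANSCRIPT ==="
        )
        return [
            {"role": "system", "content": system},
            {"role": "user", "content": question},
        ]


agent = InterviewAgent("p001", "Q: Where did you grow up?\nA: In a small town in Ohio.")
messages = agent.build_messages("Generally speaking, would you say most people can be trusted?")
```

The role/content dictionaries mirror the message format of OpenAI's Chat Completions API, so a list like this could be sent in a GPT-4o request. The paper's actual prompts and its expert-reflection step are not reproduced here.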

The flowchart illustrates the interaction between a generative AI agent with a memory function and the processing of interview transcripts based on user questions. The Expert Reflection component enables continuous learning to improve prediction quality. | Image: Park et al.

Interview-based agents outperform demographic agents

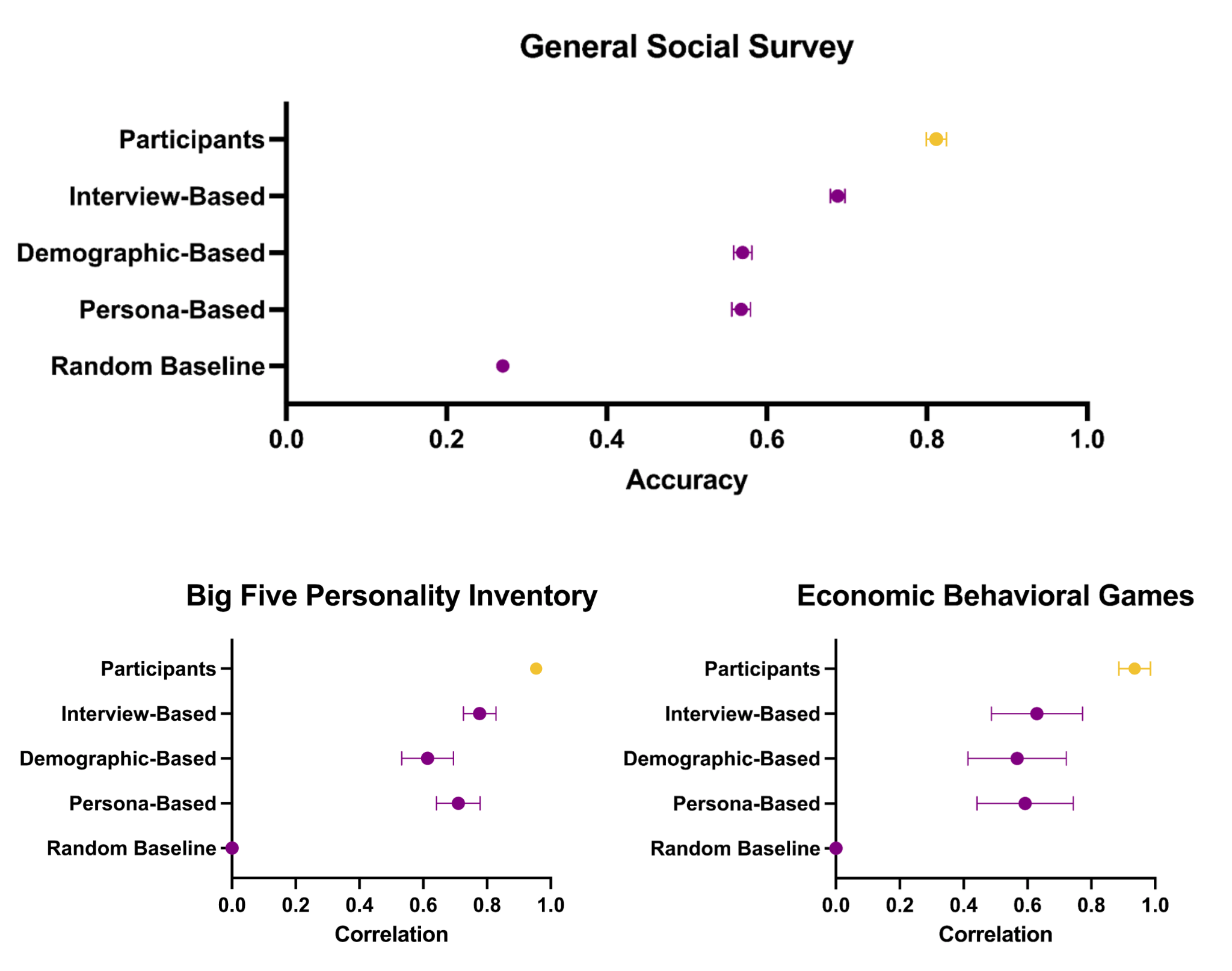

The research team put these AI agents through several tests to measure their ability to predict human behavior. They used questions from the General Social Survey, Big Five personality assessments, and multiple behavioral economics games.

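The AI agents based on interview data predicted participants' GSS responses 85% as accurately as the participants reproduced their own answers when they retook the survey two weeks later. They performed significantly better than AI agents that used only basic demographic information.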
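The 85% figure is a relative score rather than raw agreement: the agent's accuracy is measured against how consistent people are with themselves. Below is a minimal sketch of that normalization, assuming simple categorical answers scored by exact match. The paper's handling of ordinal and continuous items is not covered, and the function names and toy answers are hypothetical.

```python
def accuracy(predicted: list[str], actual: list[str]) -> float:
    """Fraction of questions on which two answer lists agree exactly."""
    if not actual or len(predicted) != len(actual):
        raise ValueError("answer lists must be non-empty and of equal length")
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


def normalized_accuracy(agent: list[str], original: list[str], retest: list[str]) -> float:
    """Agent accuracy against the participant's original answers, divided by
    the participant's own agreement with those answers on a later retest."""
    self_consistency = accuracy(retest, original)
    if self_consistency == 0:
        raise ValueError("participant retest agreement is zero; ratio undefined")
    return accuracy(agent, original) / self_consistency


# Toy answers for five yes/no items (made up for illustration).
original = ["yes", "no", "yes", "no", "yes"]
retest = ["yes", "no", "yes", "yes", "yes"]  # participant matches themselves on 4 of 5
agent = ["yes", "no", "no", "yes", "yes"]    # agent matches the original on 3 of 5

print(round(normalized_accuracy(agent, original, retest), 2))  # 0.75
```

Dividing by self-consistency means an agent is not penalized for answers that people themselves do not give reliably. It also means the score can exceed 1 if an agent happens to agree with the original answers more often than the participant did on the retest.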

The results of the analysis show clear differences in the predictive accuracy of the different methods, with the interview-based approach being the most effective, other than talking directly to people. | Image: Park et al.

The researchers also ran five social science experiments with both human participants and AI agents. In four of these five studies, the agents' results closely matched those of the human participants. The effect sizes produced by the agents correlated strongly with those observed in the human participants, with a correlation coefficient of 0.98.
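The 0.98 figure pairs up, experiment by experiment, the size of the effect observed among human participants with the size of the effect the agents produced. Here is a minimal sketch of Pearson's correlation coefficient, the standard measure for this kind of comparison. That Pearson's r is the exact statistic used is an assumption, and the effect sizes below are invented for illustration; they are not the study's data.

```python
from math import sqrt


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation between two paired lists of numbers."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two paired lists with at least two values each")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Invented effect sizes for five hypothetical experiments (same order in both lists).
human_effects = [0.42, 0.15, 0.60, 0.05, 0.33]
agent_effects = [0.45, 0.12, 0.58, 0.10, 0.30]

print(f"r = {pearson_r(human_effects, agent_effects):.2f}")
```

Because r measures linear association, agents whose effects were uniformly larger or smaller than the humans' could still score near 1. A high r therefore says the agents preserve the pattern of effects across experiments, not necessarily their exact magnitudes.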

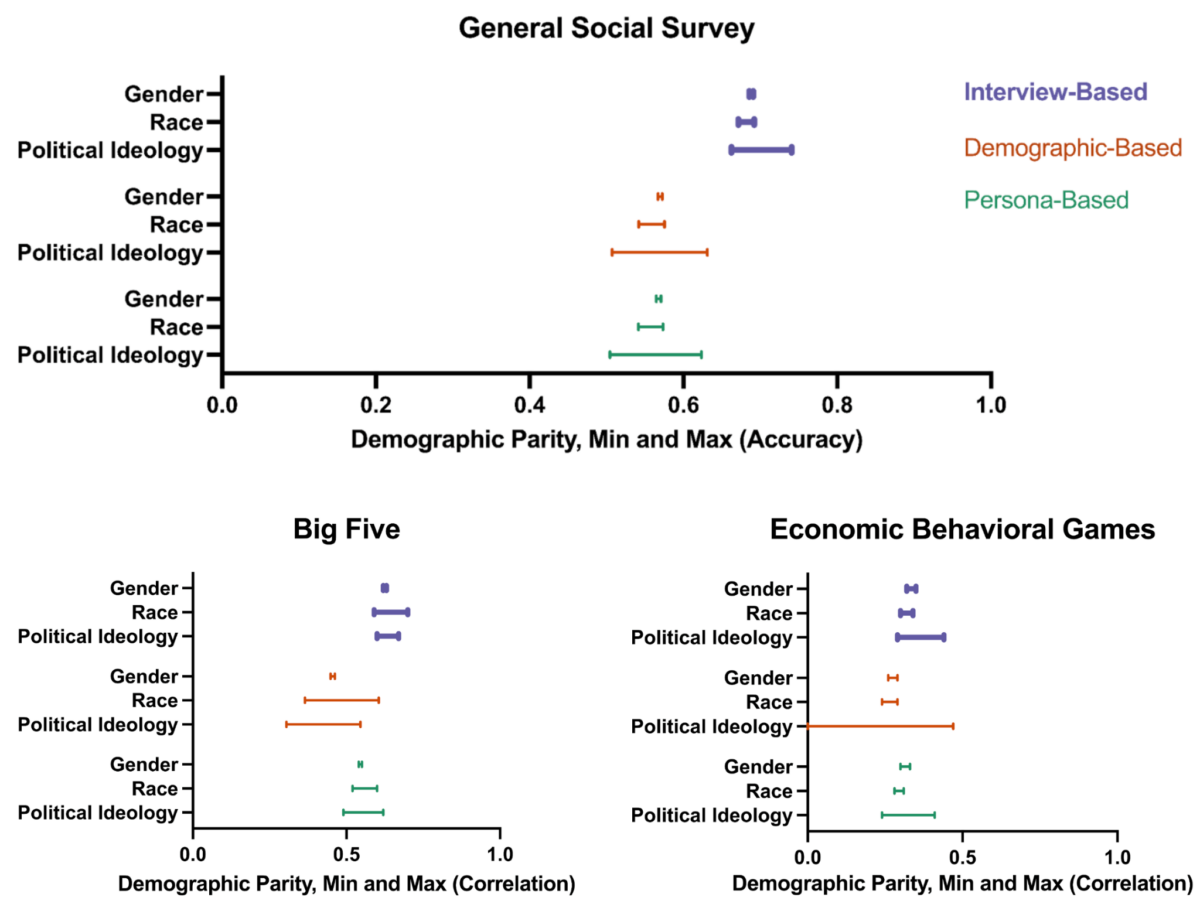

The analysis shows demographic parity for three different agent types: interview-based, demographic, and persona-based. Again, the interview-based approach is the most effective overall. | Image: Park et al.

The interview-based approach also reduced bias compared with methods that use only demographic information. Its agents predicted responses more accurately across different political ideologies and ethnic groups, with smaller accuracy gaps between demographic categories.
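One simple way to quantify this kind of bias is to compute prediction accuracy separately for each group and look at the gap between the best- and worst-served group. The following sketch assumes that definition of the parity gap; the group labels and records are hypothetical, and the paper's exact metric may differ in detail.

```python
from collections import defaultdict


def group_accuracies(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Prediction accuracy per demographic group.

    records: (group_label, prediction_was_correct) pairs, one per prediction.
    """
    correct: defaultdict[str, int] = defaultdict(int)
    total: defaultdict[str, int] = defaultdict(int)
    for group, is_correct in records:
        total[group] += 1
        correct[group] += int(is_correct)
    return {group: correct[group] / total[group] for group in total}


def parity_gap(records: list[tuple[str, bool]]) -> float:
    """Best-served minus worst-served group accuracy (0 means perfectly even)."""
    accuracies = group_accuracies(records)
    if not accuracies:
        raise ValueError("no records")
    return max(accuracies.values()) - min(accuracies.values())


# Made-up predictions: 3 of 4 correct for one group, 1 of 2 for the other.
records = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
    ("group_b", True), ("group_b", False),
]
print(parity_gap(records))  # 0.25
```

A smaller gap for one agent type than another, as reported for the interview-based agents, means its accuracy is spread more evenly across groups, even if overall accuracy were similar.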

Access to research data

The research team has made their dataset of 1,000 AI agents available to other scientists through GitHub. They created a two-tier access system to protect participant privacy while supporting further research. Scientists can freely access combined response data for specific tasks, while access to individual response data for open-ended research requires special permission.
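The article does not say how the openly available combined data is derived from the individual responses. Purely as a hypothetical illustration of the difference between the two tiers, this sketch collapses individual answers into per-question counts so that no participant ID survives. The data layout, function name, and question IDs are assumptions, and the actual GitHub release may be structured differently.

```python
from collections import Counter


def aggregate_responses(individual: dict[str, dict[str, str]]) -> dict[str, Counter]:
    """Collapse per-participant answers into per-question answer counts.

    individual maps a participant ID to that participant's {question: answer}.
    The result records only how often each answer occurred, without participant IDs.
    """
    summary: dict[str, Counter] = {}
    for answers in individual.values():  # participant IDs (the keys) are never copied
        for question, answer in answers.items():
            summary.setdefault(question, Counter())[answer] += 1
    return summary


# Hypothetical individual-level records (the restricted tier in this sketch).
individual = {
    "p001": {"q1": "agree", "q2": "no"},
    "p002": {"q1": "agree"},
    "p003": {"q1": "disagree", "q2": "no"},
}
print(aggregate_responses(individual))
```

Aggregation on its own does not guarantee privacy, since very small counts can still point to individuals. This sketch shows only the structural difference between the two kinds of data.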

This system aims to help researchers study human behavior while maintaining strong privacy protections for the original interview participants. The dataset could serve as a testing ground for theories in economics, sociology, and political science.
