
Johns Hopkins University and AMD have developed Agent Laboratory, a new open-source framework that pairs human creativity with AI-powered workflows.

Unlike other AI tools that try to come up with research ideas on their own, Agent Laboratory focuses on helping scientists carry out their research more efficiently.

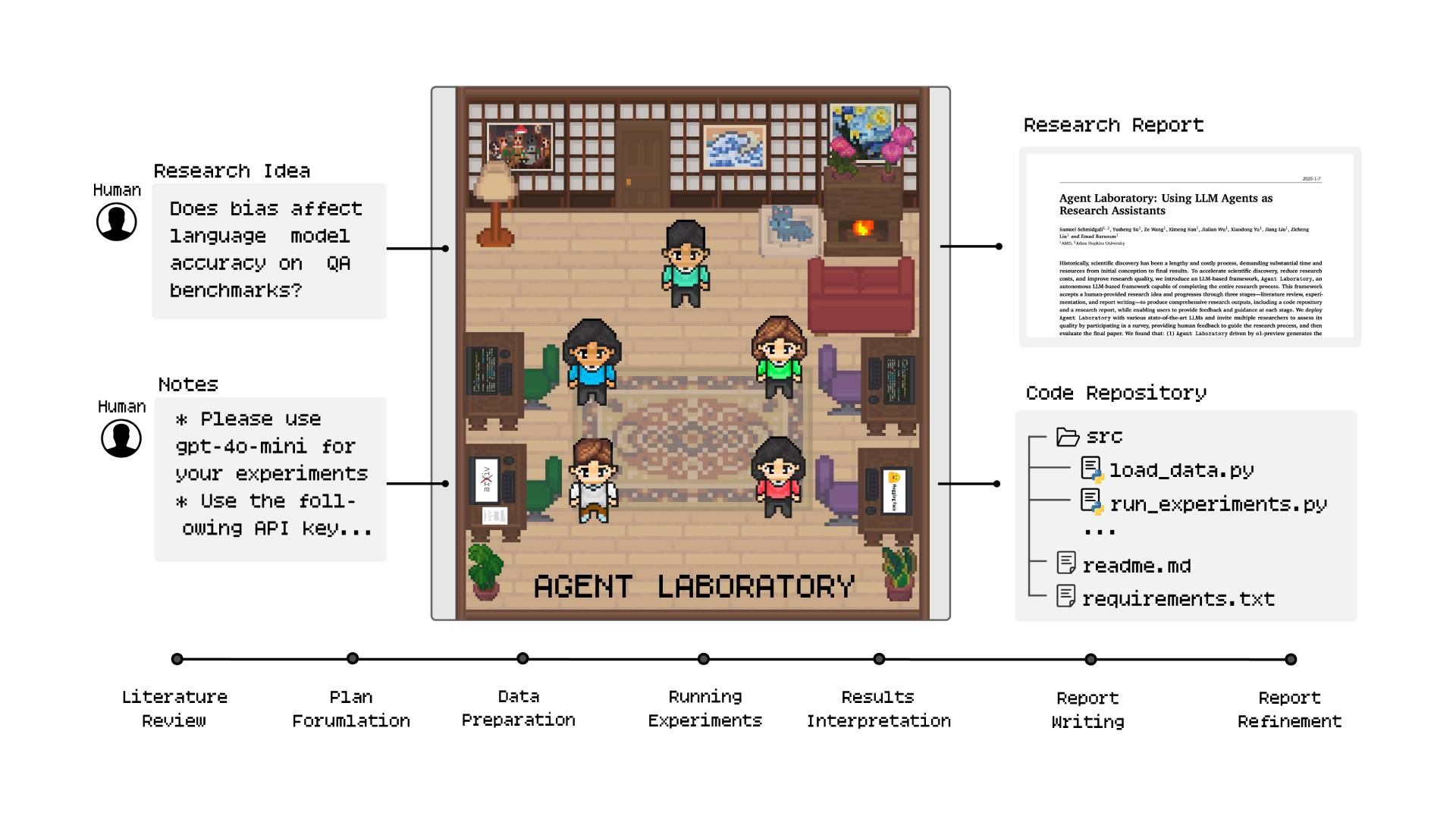

Agent Laboratory enables a fully automated research process, from literature search to report generation. Multiple AI agents work together in a virtual lab setting to conduct and document scientific research. | Image: Schmidgall et al.

How the virtual lab gets work done

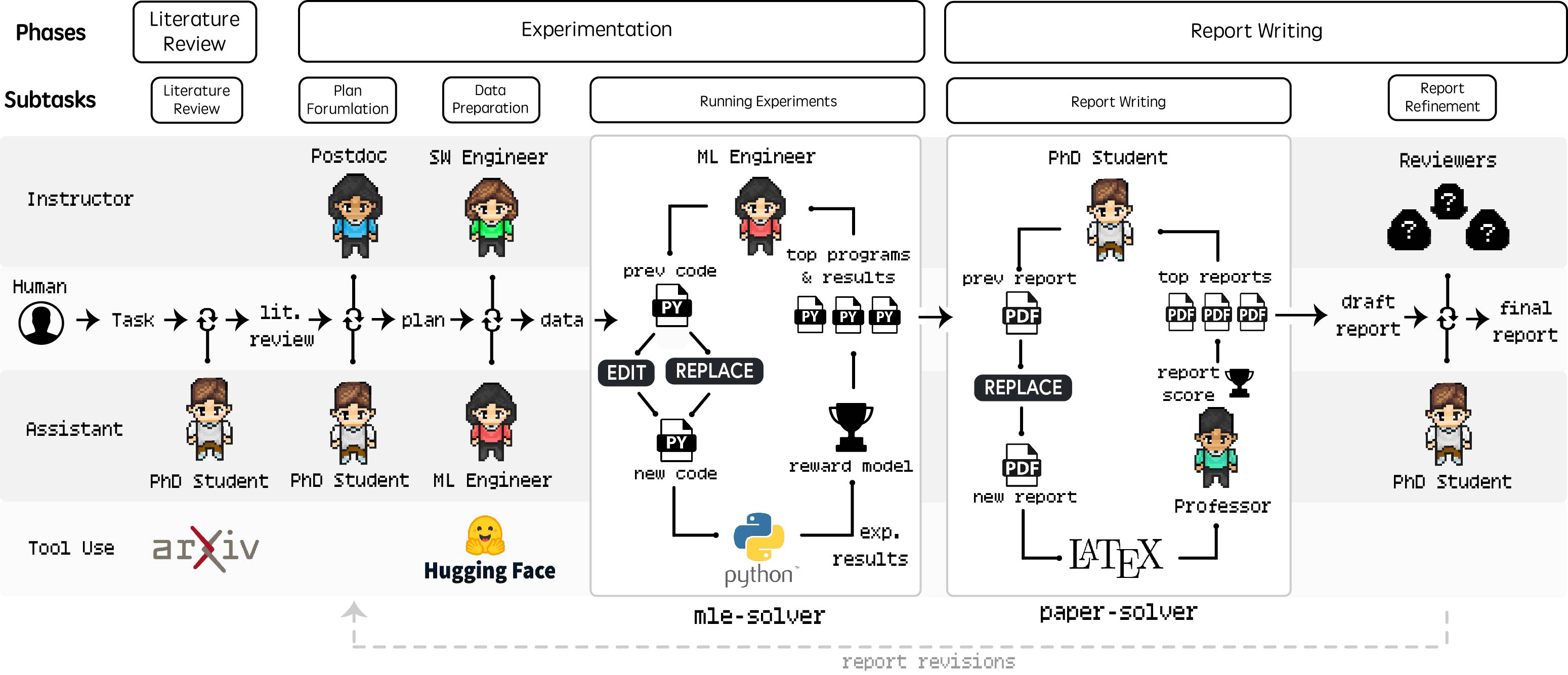

The process follows the typical path of academic research. It starts with a PhD agent that digs through academic papers using the arXiv API, gathering and organizing all the relevant research for the project.
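The article does not say how the PhD agent phrases its arXiv searches. As a rough illustration of the kind of request involved, the public arXiv API accepts a search expression and paging parameters in an ordinary HTTP query string. The helper below is a minimal sketch under that assumption: the function name `build_arxiv_query` and the choice to AND together `all:` field searches are our own, not Agent Laboratory's.

```python
from typing import List
from urllib.parse import urlencode

# Public query endpoint of the arXiv API (returns an Atom XML feed).
ARXIV_API = "http://export.arxiv.org/api/query"


def build_arxiv_query(keywords: List[str], max_results: int = 10, start: int = 0) -> str:
    """Build an arXiv API search URL that requires every keyword to match.

    Each keyword is searched across all fields with the `all:` prefix,
    and the terms are combined with the Boolean AND operator.
    """
    search = " AND ".join(f"all:{kw}" for kw in keywords)
    params = {
        "search_query": search,
        "start": start,              # offset for paging through results
        "max_results": max_results,  # number of entries to return
        "sortBy": "relevance",
        "sortOrder": "descending",
    }
    # urlencode percent-encodes ':' and turns spaces into '+'.
    return f"{ARXIV_API}?{urlencode(params)}"


if __name__ == "__main__":
    url = build_arxiv_query(["agents", "scientific", "research"], max_results=5)
    print(url)
    # Fetching requires network access:
    # from urllib.request import urlopen
    # feed = urlopen(url).read().decode("utf-8")
```

Fetching the URL returns an Atom XML feed, which an agent would still need to parse into titles, abstracts, and links before summarizing them.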

From there, PhD and postdoc agents team up to build a detailed research plan based on what they learned from the literature. Through ongoing discussions, they map out exactly what needs to happen to test the researcher's ideas.


Next, an ML engineer agent rolls up its sleeves and handles the technical work, using a specialized tool called mle-solver to generate, test, and refine machine learning code.
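The article only says that mle-solver creates, tests, and refines ML code. One generic way to picture such a loop is a simple hill-climbing search that keeps the best-scoring candidate seen so far. The sketch below shows that generic pattern, with hypothetical `generate` and `evaluate` callables standing in for the LLM and the test harness; it is not mle-solver's actual implementation.

```python
from typing import Callable, Optional, Tuple


def refine_loop(
    generate: Callable[[Optional[str]], str],
    evaluate: Callable[[str], float],
    iterations: int = 5,
) -> Tuple[Optional[str], float]:
    """Generic generate/test/refine loop that keeps the best candidate.

    `generate` receives the best candidate so far (None on the first round)
    and returns a new one; `evaluate` scores it (higher is better).
    """
    best: Optional[str] = None
    best_score = float("-inf")
    for _ in range(iterations):
        candidate = generate(best)
        try:
            score = evaluate(candidate)
        except Exception:
            # A candidate that crashes during testing is discarded.
            continue
        if score > best_score:
            best, best_score = candidate, score
    return best, best_score


if __name__ == "__main__":
    # Toy stand-ins: "generation" appends a line, "testing" counts lines.
    def toy_generate(prev: Optional[str]) -> str:
        return (prev + "\n" if prev else "") + "step()"

    def toy_evaluate(code: str) -> float:
        return float(code.count("step()"))

    best, score = refine_loop(toy_generate, toy_evaluate, iterations=3)
    print(score)  # 3.0
```

Because each round starts from the best candidate so far, a failed or worse attempt never overwrites progress; real systems typically add richer feedback, such as error messages, to the next generation step.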

Specialized tools such as mle-solver and paper-solver automate complex research tasks from literature search to report generation. | Image: Schmidgall et al.

When the experiments are complete, PhD and professor agents work together to write up the findings. Using a tool called paper-solver, they generate and refine a comprehensive academic report through several iterations until it clearly presents the research in a format humans can easily understand.
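The workflow described above can be summarized as an ordered pipeline of phases, each handled by specific agents. This is a hypothetical sketch: the phase order, agent roles, and tool names follow the article, while `Phase`, `PIPELINE`, `run_pipeline`, and the `handle` callback are illustrative names that do not reflect Agent Laboratory's actual code.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Phase:
    name: str
    agents: List[str]
    tool: Optional[str] = None  # main tool named in the article, if any


# Order and roles follow the article's description of the workflow.
PIPELINE: List[Phase] = [
    Phase("literature review", ["PhD"], tool="arXiv API"),
    Phase("plan formulation", ["PhD", "postdoc"]),
    Phase("experimentation", ["ML engineer"], tool="mle-solver"),
    Phase("report writing", ["PhD", "professor"], tool="paper-solver"),
]


def run_pipeline(
    pipeline: List[Phase],
    handle: Callable[[Phase, Dict[str, object]], object],
) -> Dict[str, object]:
    """Run phases in order; each phase sees the outputs of all earlier phases."""
    context: Dict[str, object] = {}
    for phase in pipeline:
        # `handle` is a hypothetical stand-in for the agents doing the work.
        context[phase.name] = handle(phase, context)
    return context


if __name__ == "__main__":
    def describe(phase: Phase, context: Dict[str, object]) -> str:
        tool = f" using {phase.tool}" if phase.tool else ""
        return f"{' + '.join(phase.agents)}{tool}"

    for name, summary in run_pipeline(PIPELINE, describe).items():
        print(f"{name}: {summary}")
```

Passing each phase the accumulated context mirrors how later stages build on earlier ones, for example the report-writing agents drawing on the experiment results.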

The researchers published a sample thesis and documented all the specific prompts used throughout the research process in the appendix of their paper.

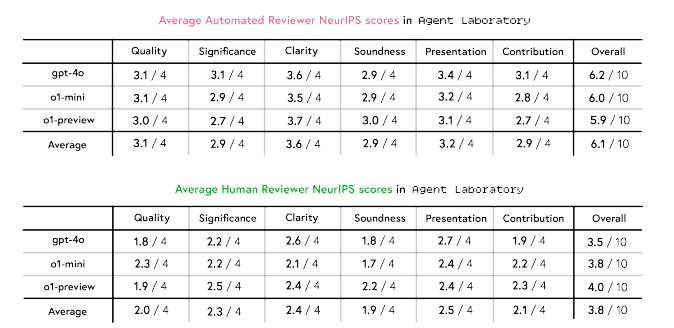

Human reviewers prefer o1-preview

When human reviewers looked at papers produced by Agent Laboratory, they found that different AI models produced different results. OpenAI's o1-preview model came out on top overall, especially for clarity and validity, while o1-mini earned the highest marks for experimental quality.

AI reviewers and human reviewers saw things quite differently. The AI reviewers gave scores about 2.3 points higher on average than the human reviewers did, particularly when it came to how clear and well-presented the papers were.


The automated reviewers rated the generated papers an average of 2.3 points higher than human reviewers. | Image: Schmidgall et al.

The system also lets researchers work alongside the AI in what's called co-pilot mode. Papers produced this way typically scored higher than fully automated ones, though sometimes at the cost of experimental quality and usefulness.

Bottom line

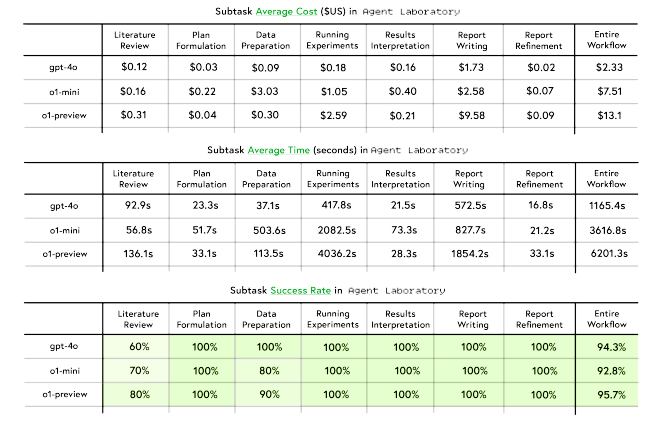

The researchers found that Agent Laboratory can produce papers quite cheaply: just $2.33 per paper when using GPT-4o. Among the different AI models tested, GPT-4o offered the best balance of performance and cost, while o1-preview achieved similar success rates but took longer and cost more.

GPT-4o achieves the highest overall performance at a lower cost, while o1-preview achieves similar success rates at a significantly higher cost. | Image: Schmidgall et al.

The team acknowledges several limitations: the AI's tendency to overrate its own work, the constraints of automated research, and the risk of generating incorrect information.

While progress in developing more capable large language models seems to have slowed recently, researchers and companies are shifting their focus to creating agent frameworks that connect multiple LLMs and tools. These frameworks often mirror the structures and workflows of human organizations, whether for conducting focus groups or translating long documents.
