
A team of researchers from NYU, MIT, and Google has found a way to improve AI-generated images by borrowing ideas from recent AI reasoning models like OpenAI's o1.

Their approach improves image quality by spending extra compute at inference time. Diffusion models already offer one such lever, since adding denoising steps tends to improve results, but those gains typically flatten out after a few dozen steps. In their paper "Inference-Time Scaling for Diffusion Models beyond Scaling Denoising Steps," the researchers introduce two key components: verifiers that act as quality checkers, and search algorithms that use these quality ratings to find better images.

What makes this approach interesting is that it improves results without retraining the model. Instead, it optimizes the generation process itself, much as OpenAI's o1, Google's Gemini 2.0 Flash Thinking, and DeepSeek's R1 spend extra compute to refine their output while generating text.

Verifiers and three search algorithms

The system uses several verifiers to evaluate different aspects of each generated image. These include an "Aesthetic Score" for visual quality, "CLIPScore," which checks how well the image matches the text prompt, and "ImageReward," a model trained to predict human preferences. The researchers also combined these evaluators into a "verifier ensemble" so that multiple quality factors are considered at once.
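The article does not say how the ensemble merges the individual scores. One simple option, assumed here purely for illustration, is rank averaging: each verifier ranks the candidate images, and the candidate with the best average rank wins. Ranking sidesteps the fact that the verifiers use very different scales (an aesthetic score and a CLIP similarity are not directly comparable). The verifier names and score values below are hypothetical.

```python
from typing import Dict, List


def rank_scores(scores: List[float]) -> List[int]:
    """Return each candidate's rank under one verifier (0 = best, higher score is better)."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    ranks = [0] * len(scores)
    for rank, idx in enumerate(order):
        ranks[idx] = rank
    return ranks


def ensemble_pick(scores_by_verifier: Dict[str, List[float]]) -> int:
    """Pick the candidate with the lowest mean rank across all verifiers (assumed scheme)."""
    all_ranks = [rank_scores(s) for s in scores_by_verifier.values()]
    n = len(all_ranks[0])
    mean_rank = [sum(r[i] for r in all_ranks) / len(all_ranks) for i in range(n)]
    return min(range(n), key=lambda i: mean_rank[i])


# Hypothetical scores for three candidate images from three verifiers.
scores = {
    "aesthetic": [5.1, 6.3, 5.8],
    "clip": [0.31, 0.28, 0.33],
    "image_reward": [0.2, 0.6, 0.7],
}
print(ensemble_pick(scores))  # → 2
```

Candidate 2 is not the top pick of every verifier, but it ranks first on two of them and second on the third, so it has the best average rank.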


The team tested three search algorithms. Random Search generates several images from different random starting noise and keeps the one the verifier rates highest, though too many attempts can lead to overly similar images. Zero-Order Search starts from a single random noise sample and repeatedly looks for better candidates in its immediate neighborhood. Search over Paths, the most sophisticated approach, searches over the whole generation trajectory, refining candidates at intermediate denoising steps along the way.
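The first two search strategies can be sketched in a few lines. This is a toy illustration under stated assumptions, not the researchers' code: `toy_generate` is a hypothetical stand-in for a diffusion sampler (here the "image" is simply the noise vector), `toy_verify` is a hypothetical verifier that rewards images close to an all-ones target, and the zero-order neighborhood is simplified to a small per-coordinate perturbation.

```python
import random
from typing import Callable, List, Optional, Tuple

Noise = List[float]
GenerateFn = Callable[[Noise], List[float]]  # hypothetical: noise -> image
VerifyFn = Callable[[List[float]], float]    # hypothetical: image -> quality score


def sample_noise(rng: random.Random, dim: int) -> Noise:
    # Standard Gaussian starting noise, as a diffusion sampler would draw.
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def random_search(generate: GenerateFn, verify: VerifyFn, n: int, dim: int,
                  seed: int = 0) -> Tuple[Noise, float]:
    """Best-of-N: draw n starting noises, generate from each, keep the top-scoring one."""
    rng = random.Random(seed)
    best_noise: Optional[Noise] = None
    best_score = float("-inf")
    for _ in range(n):
        noise = sample_noise(rng, dim)
        score = verify(generate(noise))
        if score > best_score:
            best_noise, best_score = noise, score
    assert best_noise is not None, "n must be at least 1"
    return best_noise, best_score


def zero_order_search(generate: GenerateFn, verify: VerifyFn, dim: int, k: int = 8,
                      radius: float = 0.3, iters: int = 20,
                      seed: int = 0) -> Tuple[Noise, float]:
    """Start from one random noise (the pivot), sample k nearby candidates,
    and move the pivot whenever a candidate scores higher than it."""
    rng = random.Random(seed)
    pivot = sample_noise(rng, dim)
    pivot_score = verify(generate(pivot))
    for _ in range(iters):
        # Perturb every coordinate by at most `radius` (a simplified neighborhood).
        candidates = [[p + rng.uniform(-radius, radius) for p in pivot]
                      for _ in range(k)]
        score, best = max(((verify(generate(c)), c) for c in candidates),
                          key=lambda pair: pair[0])
        if score > pivot_score:
            pivot, pivot_score = best, score
    return pivot, pivot_score


def toy_generate(noise: Noise) -> List[float]:
    # Hypothetical sampler: the "image" is just a copy of the noise.
    return list(noise)


def toy_verify(image: List[float]) -> float:
    # Hypothetical verifier: higher when the image is closer to all ones.
    return -sum((x - 1.0) ** 2 for x in image)


_, rs_score = random_search(toy_generate, toy_verify, n=16, dim=4)
_, zo_score = zero_order_search(toy_generate, toy_verify, dim=4)
print(f"random search: {rs_score:.3f}, zero-order search: {zo_score:.3f}")
```

Both functions only ever keep a candidate if the verifier scores it higher, so more compute (a larger `n`, or more `iters`) can never make the returned score worse in this toy setting. Search over Paths needs access to the sampler's intermediate denoising states, so it is not sketched here.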

Inference-time scaling shows significantly better results

Testing showed that all three methods significantly improved image quality; even smaller models using this optimization outperformed larger models without it. The trade-off is compute: better images take more inference time. The researchers found that about 50 extra computing steps per image strikes a good balance between quality and speed.
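Why search costs more time can be seen with a rough cost model. The sketch below is a generic back-of-the-envelope estimate for best-of-N search, not the paper's own accounting: it assumes every candidate runs the full sampler and is then scored once by a verifier whose cost is expressed, hypothetically, in units of denoiser forward passes.

```python
def search_cost(num_candidates: int, denoising_steps: int,
                verifier_cost: float = 0.0) -> float:
    """Rough compute for best-of-N search, in denoiser forward passes.

    Each candidate runs the full sampler (denoising_steps passes) and is then
    scored once by a verifier costing verifier_cost passes (hypothetical unit).
    """
    return num_candidates * (denoising_steps + verifier_cost)


print(search_cost(1, 50))       # → 50.0
print(search_cost(4, 50))       # → 200.0
print(search_cost(4, 50, 2.0))  # → 208.0
```

Under this model, compute grows linearly with the number of candidates, which is why the quality gains from search have to be weighed against generation time.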

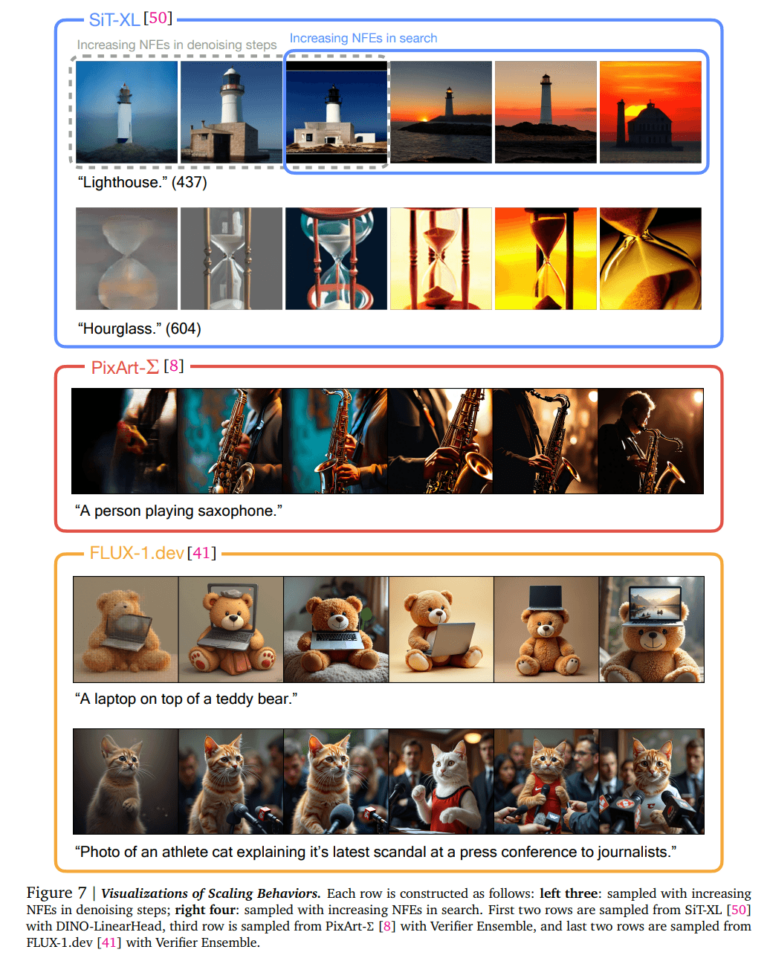

The image series shows the difference between increased computing power for denoising vs. increased computing power for the combination of verifier and search. The quality and adherence to prompts often improve significantly when search is added. | Image: Google DeepMind

Different verifiers showed distinct preferences: the Aesthetic Score tends to produce more artistic images, while CLIPScore favors realistic ones that closely match the text prompt. Users therefore need to choose a verifier based on the kind of results they are looking for.
