The Allen Institute for AI has released Tülu 3 405B, an open source language model that reportedly matches or exceeds the performance of DeepSeek V3 and GPT-4o. The team credits much of this success to a new training approach called RLVR.

Built on Llama 3.1, the model uses "Reinforcement Learning with Verifiable Rewards" (RLVR), which rewards the system only when it produces verifiably correct answers. According to AI2, this approach works particularly well for mathematical tasks, where results can be easily checked.
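To make the idea concrete, here is a minimal sketch of what a verifiable reward for a math task could look like. It is not AI2's implementation: the function names (`extract_final_answer`, `verifiable_reward`), the rule of taking the last number in the output as the final answer, and the reward value of 1.0 are all assumptions made for illustration.

```python
import re
from typing import Optional


def extract_final_answer(completion: str) -> Optional[str]:
    """Hypothetical helper: treat the last number in the text as the final answer."""
    matches = re.findall(r"-?\d+(?:\.\d+)?", completion)
    return matches[-1] if matches else None


def verifiable_reward(completion: str, ground_truth: str, reward: float = 1.0) -> float:
    """Return `reward` only if the extracted answer equals the known result.

    There is no partial credit: an unparseable or wrong answer earns 0.0.
    """
    answer = extract_final_answer(completion)
    if answer is None:
        return 0.0
    return reward if float(answer) == float(ground_truth) else 0.0


# Example usage: one correct and one incorrect model output for the same task.
correct = verifiable_reward("12 * 3 + 6 = 36 + 6, so the answer is 42.", "42")
wrong = verifiable_reward("I think the result is 41.", "42")
```

The binary check is the point of the sketch: the reward comes from comparing against a known result rather than from a learned reward model's judgment, which is why tasks with easily checked answers suit this setup.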

Image: Allen AI

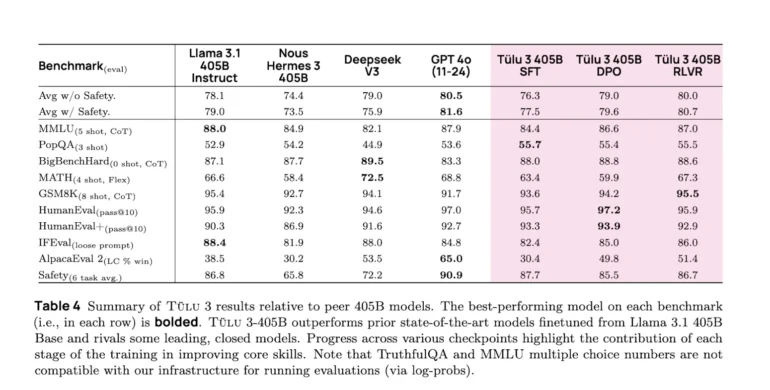

Training the 405-billion-parameter model pushed technical limits, requiring 32 compute nodes with 256 GPUs working together. Each training step took 35 minutes, and the team had to use workarounds like a smaller helper model to manage the computational demands. The project faced ongoing technical hurdles that needed constant attention, details that companies developing similar models rarely share.

Significant performance improvements demonstrated

AI2 says Tülu outperforms other open source models like Llama 3.1 405B Instruct and Nous Hermes 3 405B, even though training had to end early due to computing constraints. It also matches or exceeds the performance of DeepSeek V3 and GPT-4o. The training process combined Supervised Finetuning, Direct Preference Optimization, and RLVR. This approach shows similarities to DeepSeek's R1 training; in particular, the team reports that reinforcement learning benefited larger models more.

Users can test the model in the AI2 Playground, with code available on GitHub and models on Hugging Face.

8 months ago
