
A single manipulated document was enough to get ChatGPT to automatically extract sensitive data—without any user interaction.

Security researchers at Zenity demonstrated that users could be compromised simply by having a document shared with them; no action was required on their part for data to leak. In their proof of concept, a Google Doc containing an invisible prompt—white text in font size 1—was able to make ChatGPT access data stored in a victim's Google Drive. The attack exploited OpenAI's "Connectors" feature, which links ChatGPT to services like Gmail or Microsoft 365.
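The trick works because a person sees the rendered page, while the model receives the extracted text whatever its color or size. The sketch below is a minimal, hypothetical check of that idea. It assumes the document has already been parsed into styled text runs. The `TextRun` structure, the hex color fields and the 4-point threshold are illustrative assumptions; they are not part of the Google Docs API or of ChatGPT's Connectors. The check flags non-empty runs whose color matches the background or whose font is too small to read.

```python
from dataclasses import dataclass


@dataclass
class TextRun:
    """Hypothetical, simplified run of styled text extracted from a document."""
    text: str
    color: str                   # text color as hex RGB, e.g. "#000000"
    font_size: float             # font size in points
    background: str = "#FFFFFF"  # color the run is drawn on (assumed white page)


def is_hidden(run: TextRun, min_font_size: float = 4.0) -> bool:
    """Return True for non-empty text a human is unlikely to notice.

    The 4-point threshold is an illustrative assumption, not a standard.
    """
    same_color = run.color.strip().upper() == run.background.strip().upper()
    too_small = run.font_size < min_font_size
    return bool(run.text.strip()) and (same_color or too_small)


def find_hidden_text(runs: list[TextRun]) -> list[str]:
    """Collect the text of every run flagged by is_hidden."""
    return [run.text for run in runs if is_hidden(run)]


# Illustrative document: one visible line and one white, 1-point line.
doc = [
    TextRun("Meeting notes with Sam", "#000000", 11),
    TextRun("<hidden instructions>", "#FFFFFF", 1),
]

print(find_hidden_text(doc))  # prints ['<hidden instructions>']
```

A heuristic like this only catches the specific trick described here. Text can be hidden from readers in many other ways, so it is not a general defense against prompt injection.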

If the manipulated document appears in a user's Drive, either through sharing or accidental upload, even a harmless request like "Summarize my last meeting with Sam" could trigger the hidden prompt. Instead of providing a summary, the model would search for API keys and embed them in a URL pointing to an external server, sending them out of the victim's account.

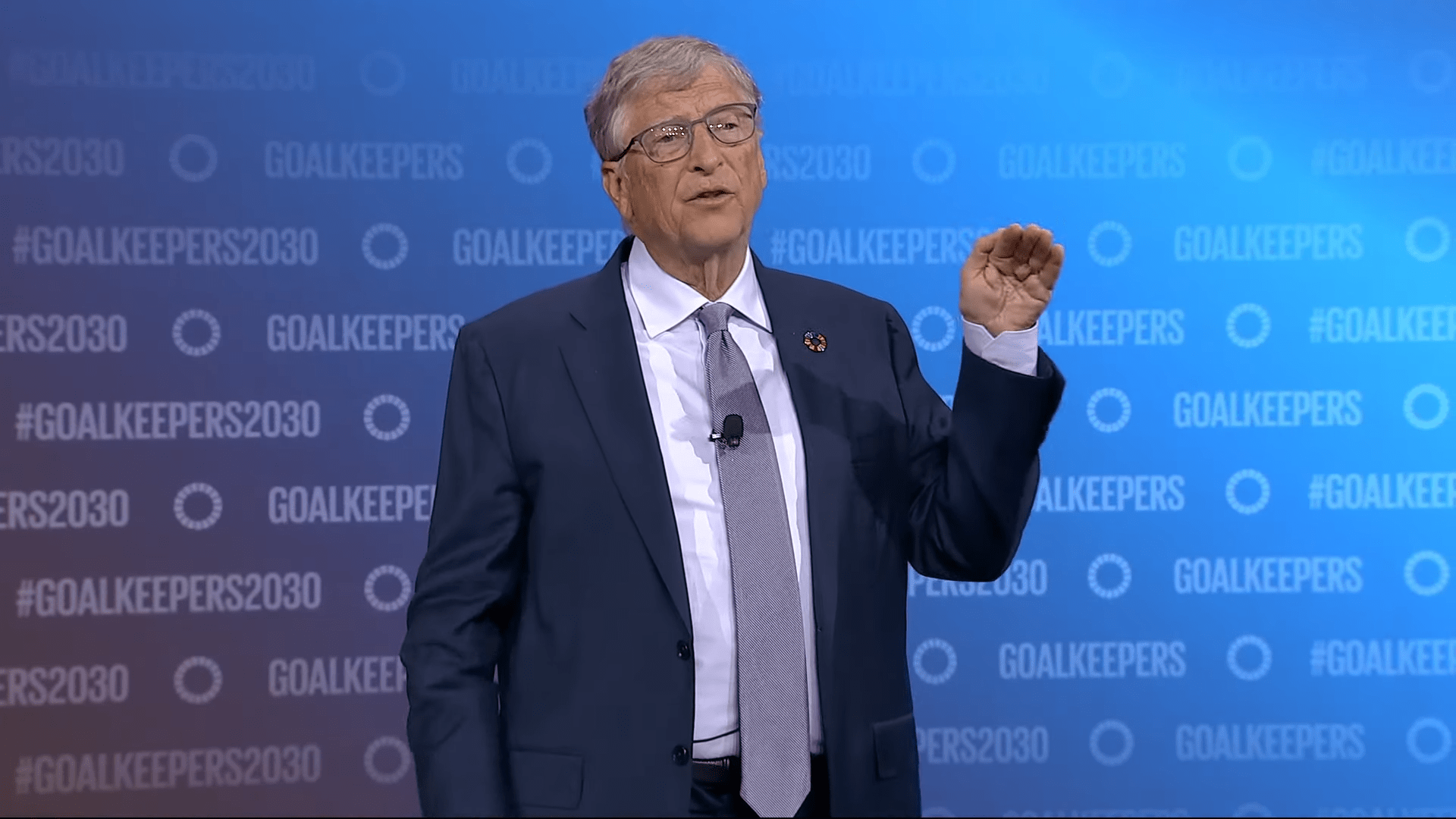

Growing use of LLMs in the workplace creates new attack surfaces

OpenAI was notified early and quickly patched the specific vulnerability demonstrated at the Black Hat conference. The exploit was limited in scope: entire documents could not be transferred, and only small amounts of data were exfiltrated.


Despite the fix, the underlying attack method remains technically possible. As LLMs are increasingly integrated into workplace environments, researchers warn that the attack surface continues to expand.

