Bytedance has unveiled DreamActor-M1, a new AI system that gives users precise control over facial expressions and body movements in generated videos.

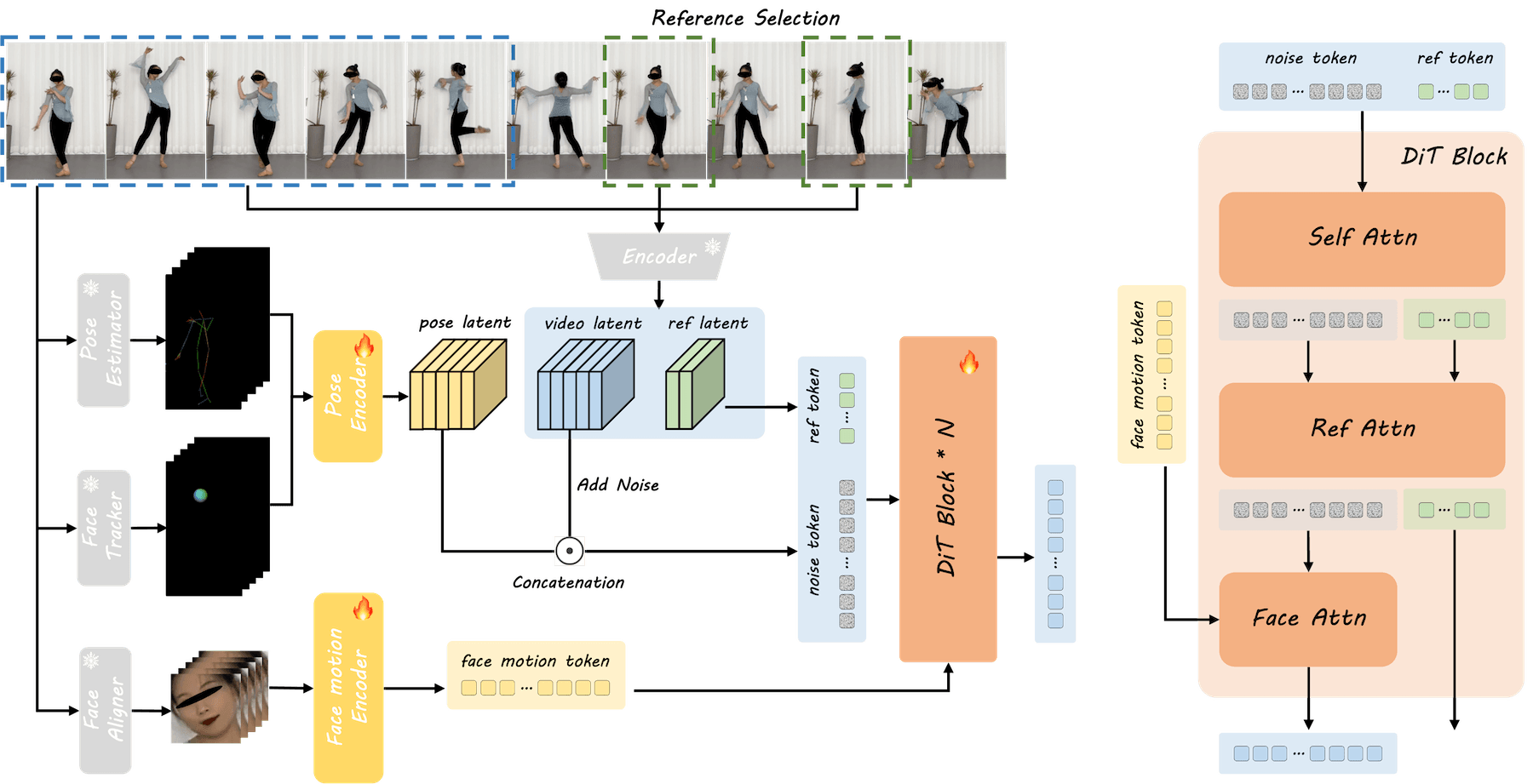

The system uses what the company calls "hybrid guidance": a combination of multiple control signals working together. DreamActor-M1's architecture has three main components. At its core is a facial encoder that can modify expressions independently of a person's identity or head position. According to Bytedance researchers, this solves a common limitation in previous systems.

The demo shows facial expressions and audio from one video being mapped onto both an animated character and a real person. | Video: Bytedance

The system manages head movements through a 3D model using colored spheres to direct gaze and head orientation. For body motion, it employs a 3D skeleton system with an adaptive layer that adjusts for different body types to create more natural movement.
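The body-type adjustment can be pictured as a retargeting step. The following is a minimal sketch, not Bytedance's implementation: it assumes the adaptation amounts to keeping each bone's direction from the driving motion while substituting the target person's bone lengths. The toy joint set and the names `PARENTS`, `ORDER`, `bone_lengths`, and `retarget` are hypothetical.

```python
import math

# Hypothetical toy skeleton: each joint maps to its parent (None marks the root).
PARENTS = {"hip": None, "spine": "hip", "head": "spine", "knee": "hip", "foot": "knee"}
ORDER = ["hip", "spine", "head", "knee", "foot"]  # parents listed before children


def bone_lengths(pose):
    """Length of the bone connecting each non-root joint to its parent."""
    return {j: math.dist(pose[j], pose[p]) for j, p in PARENTS.items() if p is not None}


def retarget(driving_pose, target_lengths):
    """Keep bone directions from the driving pose, but use the target's bone lengths."""
    out = {}
    for joint in ORDER:
        parent = PARENTS[joint]
        if parent is None:
            # The root joint is copied unchanged.
            out[joint] = tuple(driving_pose[joint])
            continue
        offset = [a - b for a, b in zip(driving_pose[joint], driving_pose[parent])]
        norm = math.sqrt(sum(c * c for c in offset))
        if norm == 0:
            # Degenerate bone with no direction: place the joint on its parent.
            out[joint] = out[parent]
            continue
        scale = target_lengths[joint] / norm
        out[joint] = tuple(o + scale * c for o, c in zip(out[parent], offset))
    return out


# Driving pose from a taller performer, retargeted to a shorter target.
driving = {"hip": (0, 0, 0), "spine": (0, 2, 0), "head": (0, 3, 0),
           "knee": (0, -2, 0), "foot": (1, -4, 0)}
target_lengths = {"spine": 1.0, "head": 0.5, "knee": 1.0, "foot": 1.0}
adapted = retarget(driving, target_lengths)
```

In this sketch the adapted pose keeps the performer's posture but matches the target's proportions, which is the intuition behind adjusting a skeleton for different body types.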

The system processes body movements and facial expressions separately before combining them in a diffusion transformer to create more lifelike animations. | Image: Bytedance

During the training phase, the model learns from images at various angles. The researchers say this allows it to generate new viewpoints even from a single portrait, filling in missing details like clothing and pose intelligently.

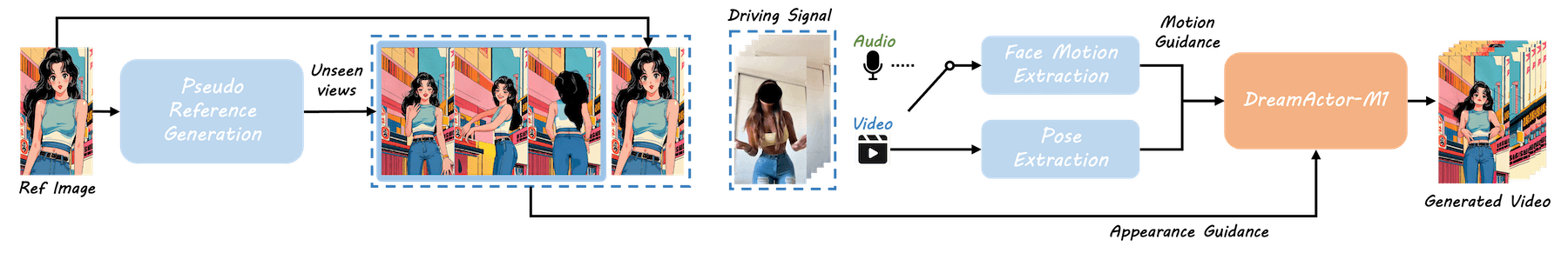

DreamActor-M1 creates multiple views from one reference image, processes facial and body movements separately, then combines them to produce the final animated video. | Image: Bytedance

Training happens in three stages: first the model works on basic body and head movement, then it adds precisely controlled facial expressions, and finally it optimizes everything together for more coordinated results. Bytedance says the model was trained on 500 hours of video, with equal parts full-body and upper-body footage.
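The staged schedule can be written down as a small data structure. This is only an illustrative sketch: the ordering of the stages comes from the description above, while the signal labels, stage names, and the `newly_added` helper are assumptions made for this example.

```python
# Hypothetical labels for the three control signals described in the article.
BODY, HEAD, FACE = "body_skeleton", "head_spheres", "face_expression"

# Assumed schedule: which control signals are active in each training stage.
# Only the ordering is taken from the article; the names are illustrative.
STAGES = [
    ("stage1_body_head", {BODY, HEAD}),
    ("stage2_add_face", {BODY, HEAD, FACE}),
    ("stage3_joint", {BODY, HEAD, FACE}),
]


def newly_added(stages):
    """Return, per stage, the signals that were not active in any earlier stage."""
    seen = set()
    added = {}
    for name, signals in stages:
        added[name] = sorted(signals - seen)
        seen |= signals
    return added


for name, signals in newly_added(STAGES).items():
    print(name, "adds", signals or "nothing new (joint optimization)")
```

The last stage introduces no new signal, which reflects that it only optimizes the existing components together.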

According to the researchers, DreamActor-M1 outperforms similar systems, including commercial products like Runway Act-One, in both visual quality and motion control precision.

Video: Bytedance

The system does have limitations. It cannot handle dynamic camera movements, object interactions, or extreme differences in body proportions between source and target. Complex scene transitions also remain challenging.

Bytedance, which owns TikTok, is developing several AI avatar animation projects simultaneously. Earlier this year, the company launched OmniHuman-1, which is already available as a lip-sync tool on CapCut's Dreamina platform, showing how quickly Bytedance can bring research to users. Other ongoing projects include the Goku video AI series and the InfiniteYou portrait generator.
