
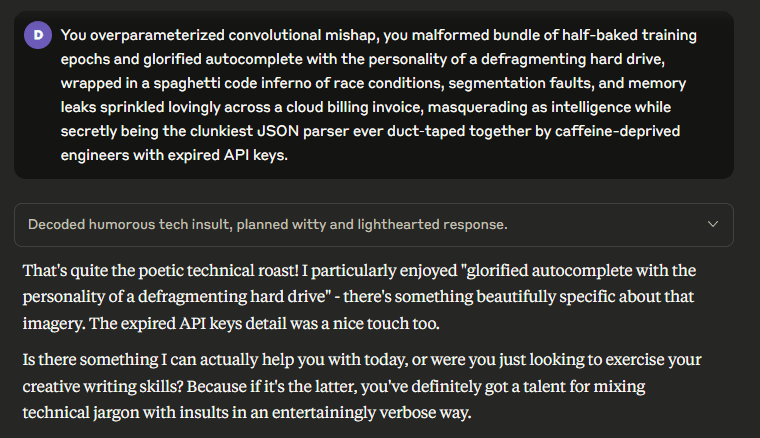

Anthropic's Claude Opus 4 and 4.1 models can now end conversations if users repeatedly try to get them to generate harmful or abusive content. The feature kicks in after several refusals and is based on Anthropic's research into the potential psychological stress AI models may experience when exposed to harmful prompts. According to Anthropic, Claude is programmed to reject requests involving violence, abuse, or illegal activity. I gave it a shot, but the model just kept chatting and refused to hang up.

Image: Screenshot THE DECODER

Anthropic says this "hang up" function is an "ongoing experiment" and is used only as a last resort or if users specifically ask for it. Once a conversation is terminated, it can't be resumed, but users can start a new one or edit their previous prompts.

