
ByteDance's latest AI models can generate realistic videos of people interacting with products, potentially transforming how companies create advertising content.

ByteDance built its new Goku AI models using a massive dataset of about 160 million image-text pairs and 36 million video-text pairs, according to the accompanying paper. The data comes from academic datasets, internet sources, and partner organizations.


Extensively filtered training data set

Unlike other video models, Goku can create both still images and videos from text descriptions. The system uses a new transformer architecture with 2 to 8 billion parameters that handles both formats simultaneously.

The system compresses images and videos into a unified format using a shared variational autoencoder (VAE), much like data compression. A custom transformer then processes this compressed data. Combined with Rectified Flow, a generative method that replaces the commonly used diffusion process, this architecture helps Goku produce consistent, high-quality output.
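To make the Rectified Flow idea more concrete, here is a minimal sketch of its generic formulation in plain Python. It is not ByteDance's implementation: the function names, the toy three-value "data" vector, and the convention that t=0 is noise and t=1 is data are all assumptions chosen for illustration. The method trains a model to predict the constant velocity along a straight line between a noise sample and a data sample, then generates output by following that velocity in small steps.

```python
import random

def interpolate(noise, data, t):
    """Point on the straight line between a noise sample (t=0) and a data sample (t=1)."""
    return [(1.0 - t) * n + t * d for n, d in zip(noise, data)]

def target_velocity(noise, data):
    """Constant velocity along that line; this is the regression target."""
    return [d - n for n, d in zip(noise, data)]

def flow_matching_loss(predict_velocity, noise, data, t):
    """Mean squared error between predicted and target velocity at time t."""
    x_t = interpolate(noise, data, t)
    pred = predict_velocity(x_t, t)
    target = target_velocity(noise, data)
    return sum((p - v) ** 2 for p, v in zip(pred, target)) / len(target)

def euler_sample(predict_velocity, noise, num_steps=10):
    """Generate a sample by integrating dx/dt = v(x, t) from t=0 to t=1."""
    x = list(noise)
    dt = 1.0 / num_steps
    for step in range(num_steps):
        t = step * dt
        v = predict_velocity(x, t)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x

# Toy check with a hypothetical "oracle" velocity that already knows the data point.
# A real system would use a trained neural network in its place.
rng = random.Random(0)
data = [1.0, -2.0, 0.5]
noise = [rng.gauss(0.0, 1.0) for _ in data]
oracle = lambda x_t, t: target_velocity(noise, data)
```

With the oracle in place of a trained model, the loss is zero and `euler_sample(oracle, noise)` lands on `data` up to floating-point error. In a real system the velocity predictor would be the transformer, operating on the VAE's compressed representation rather than on raw values.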

The training happens in phases: First, the system learns to match text with images, then it trains on both images and videos together. The final phase optimizes the model specifically for either image or video output.

To handle this complex training process, ByteDance developed specialized infrastructure that makes efficient use of available computing resources through parallelization. The system can also save progress effectively and quickly resume if something goes wrong, allowing for stable training across large computer clusters.

![Seven rows of image sequences show an astronaut walking across the lunar surface, with the Earth or Moon in the background.](https://the-decoder.com/wp-content/uploads/2025/02/goku-video-examples-comparison-770x660.jpg) Compared to other current video models, Goku seems to follow the prompt better and deliver higher quality results. | Image: ByteDance

In benchmarks, Goku performs well in both image and video generation. The video model, Goku-T2V, scored 84.85 on VBench, surpassing comparable tools such as Kling and Pika. The output quality also shows clear improvements over ByteDance's previous Jimeng AI model.

ByteDance has shared several sample clips on the project page, ranging from realistic to creative scenarios. While the company hasn't specified Goku's limitations, the examples are all four-second clips at 24 FPS in 720p resolution.


Goku+ aims to transform advertising production

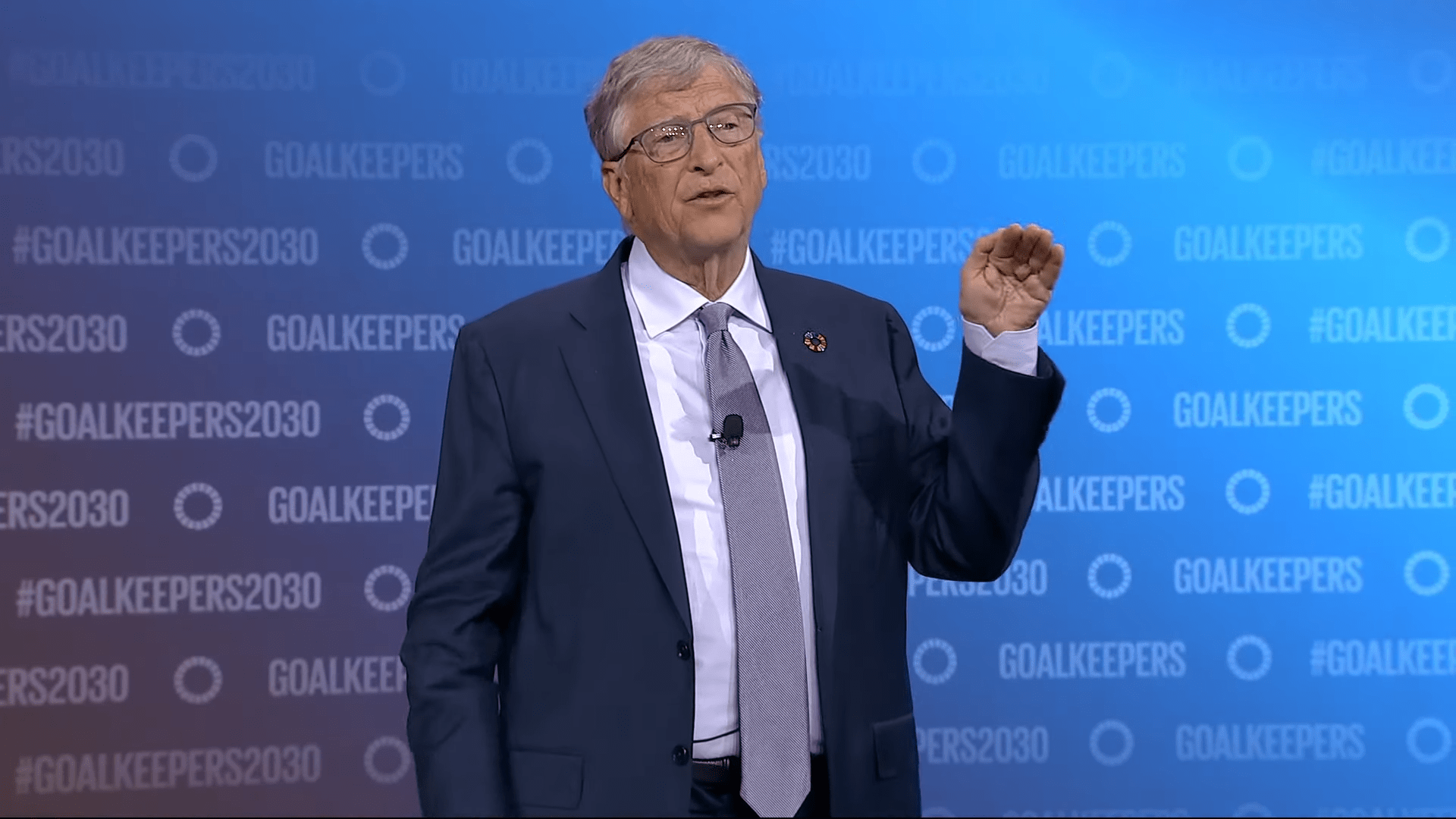

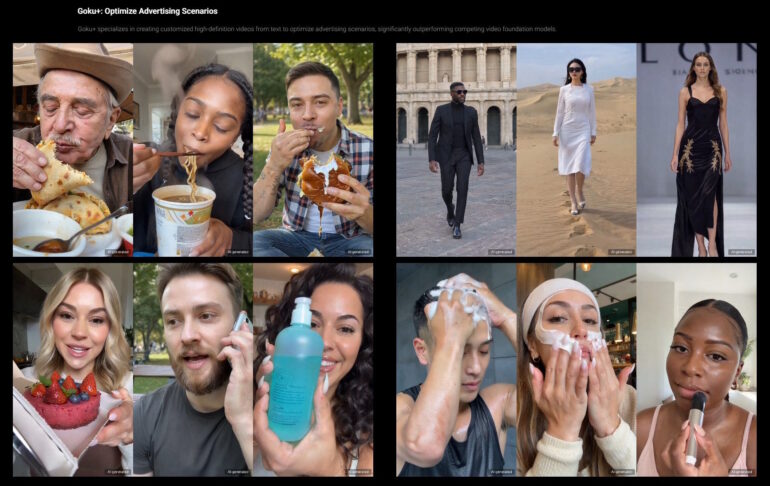

ByteDance sees applications for Goku in media production, advertising, gaming, and world modeling. A specialized version, Goku+, focuses on creating advertising content featuring people and products.

Goku+ is optimized to create advertising clips that are as authentic as possible. | Image: ByteDance

Goku+ can generate realistic videos of humans with natural hand movements, facial expressions, and gestures based on text descriptions. It can also turn product images into video clips showing human interaction.

The company says this could reduce video advertising production costs by 99 percent. Currently, companies often pay significant amounts to "UGC creators," social media content creators who make authentic-looking product videos.

While ByteDance has worked on several video AI projects, Goku appears to be one of its larger efforts. For now, it remains a research preview. The company will likely leverage its TikTok platform to offer these video creation tools to advertisers, though it faces potential complications from US government sanctions.
