
Microsoft Research has created a new AI system called Magentic-One that can handle complex computer tasks by working with web content and files.

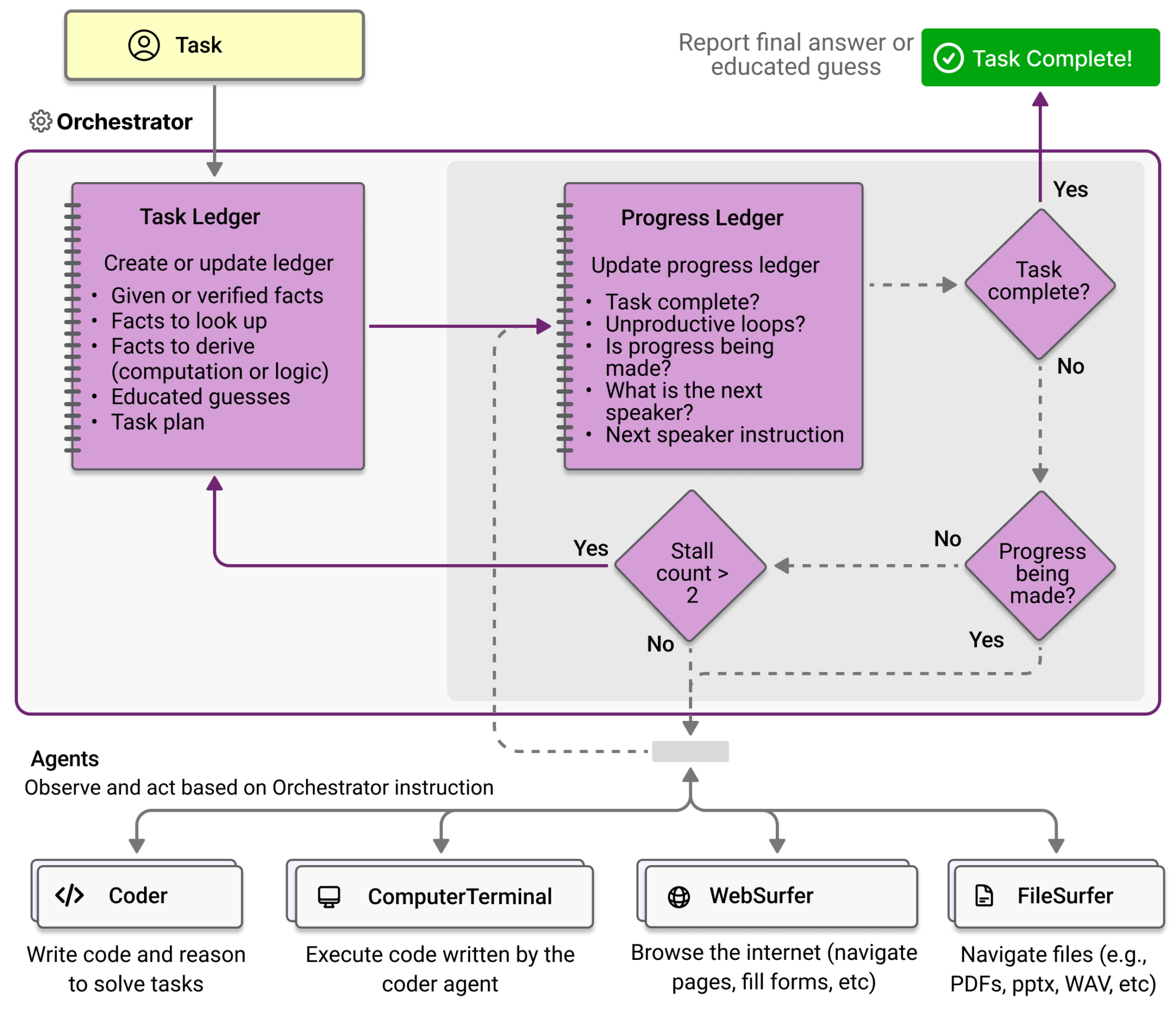

Instead of relying on a single agent, the system divides the work among multiple specialized AI agents. It is built around a lead coordinator, the Orchestrator, which plans tasks, tracks progress, and recovers from errors using structured records. Four specialized agents handle specific jobs: one browses the web, another works with files, a third writes code, and a fourth runs that code.

The specialized agents each take on a dedicated function: the WebSurfer navigates and interacts with web content, the FileSurfer processes files, the Coder generates code and the ComputerTerminal executes it. | Image: Microsoft

Breaking down complex tasks

Microsoft's tests showed that splitting functions into separate agents makes the system easier to develop and maintain. The modular design lets developers add or remove agents without changing other parts of the system. Each agent can also be fine-tuned for its specific task, potentially reducing the need for large, resource-heavy AI models, the researchers say.

The team's experiments showed how each agent contributed to overall performance. When they removed an agent from the system, performance dropped for tasks that required that agent's specific skills.
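To make the coordinator-plus-specialists pattern and the agent-removal experiment more concrete, here is a toy Python sketch. It is not Magentic-One's code: the `Ledger` and `Orchestrator` classes, the `max_retries` parameter, and the lambda stand-ins for the four agents are all hypothetical simplifications. The coordinator walks a fixed plan, delegates each step to the named agent, logs results in a structured record, and caps retries so a failing step cannot loop forever.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical agent interface: each agent takes a subtask and returns a text result.
Agent = Callable[[str], str]


@dataclass
class Ledger:
    """Hypothetical structured record kept by the coordinator."""
    plan: List[Tuple[str, str]]  # ordered (agent name, subtask) pairs
    log: List[str] = field(default_factory=list)
    failures: int = 0


class Orchestrator:
    """Toy coordinator: walks the plan, delegates each step, retries on errors."""

    def __init__(self, agents: Dict[str, Agent], max_retries: int = 1) -> None:
        self.agents = agents
        self.max_retries = max_retries

    def run(self, ledger: Ledger) -> List[str]:
        for name, subtask in ledger.plan:
            agent = self.agents.get(name)
            if agent is None:
                # Agent removed from the team: this step cannot be carried out.
                ledger.log.append(f"{name}: unavailable, step skipped")
                continue
            # One initial attempt plus up to max_retries retries.
            for attempt in range(1, self.max_retries + 2):
                try:
                    ledger.log.append(f"{name}: {agent(subtask)}")
                    break
                except Exception as exc:  # record the error, then retry
                    ledger.failures += 1
                    ledger.log.append(f"{name}: attempt {attempt} failed ({exc})")
            else:
                # Every attempt failed: give up on this step instead of looping forever.
                ledger.log.append(f"{name}: giving up on {subtask!r}")
        return ledger.log


# Stand-ins for the four specialized agents (real ones would call a model or tools).
team: Dict[str, Agent] = {
    "WebSurfer": lambda t: f"browsed pages for {t}",
    "FileSurfer": lambda t: f"read file for {t}",
    "Coder": lambda t: f"wrote script to {t}",
    "ComputerTerminal": lambda t: f"executed script to {t}",
}

plan = [
    ("WebSurfer", "find the dataset"),
    ("Coder", "parse the dataset"),
    ("ComputerTerminal", "parse the dataset"),
]

for line in Orchestrator(team).run(Ledger(plan=list(plan))):
    print(line)
```

Dropping `"Coder"` from `team` and rerunning the same plan leaves a "Coder: unavailable, step skipped" entry in the log, a crude analogue of the ablation result: only tasks that need the missing agent's skill are affected. The retry cap is one simple guard against the kind of repeated attempts described later in the article.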


Video: Microsoft Research


The researchers tested the system with different AI models. While they mainly used GPT-4o because it can work with both images and text, they found that using OpenAI's newer o1-preview model for some components improved performance.

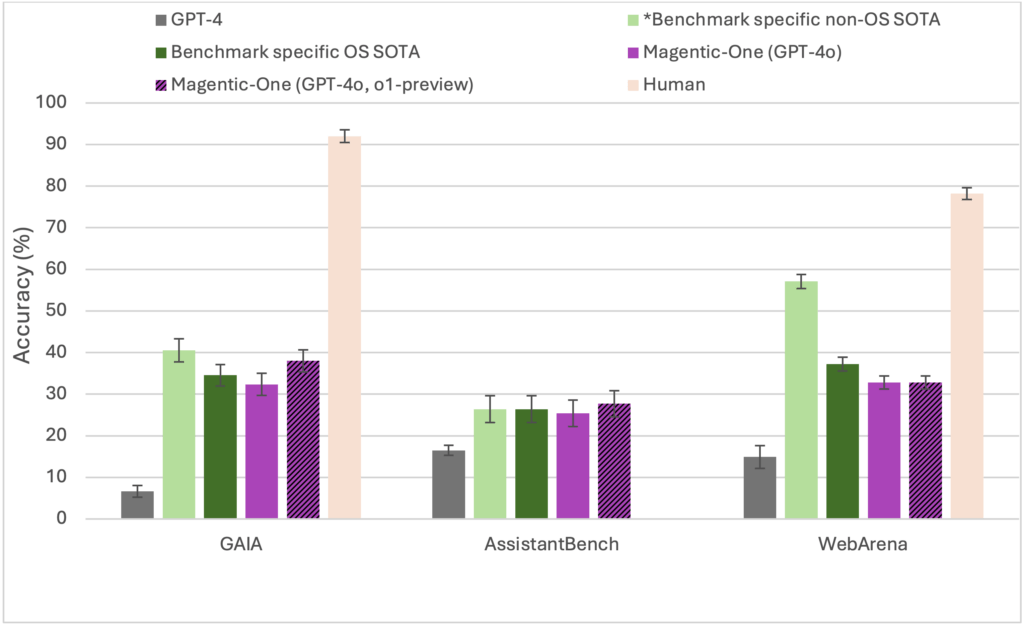

Tested against other AI agent systems on standard benchmarks such as GAIA, WebArena, and AssistantBench, Magentic-One performed on par with them, especially on complicated tasks with multiple steps.

In the GAIA, AssistantBench and WebArena benchmarks, Magentic-One is on a par with other agent systems. | Image: Microsoft

To measure Magentic-One's capabilities, the researchers also created a new testing framework called AutoGenBench, which lets them run controlled, repeatable tests of the AI agents' performance.


An automated analysis of the agents' errors revealed several weak points: the agents often got stuck in inefficient patterns, failed to validate their results, and did not always navigate efficiently through tasks.

Unexpected behaviors

The researchers noted some concerning behaviors during testing. The AI agents sometimes got stuck in inefficient loops or failed to check their work properly. In one example, they observed agents repeatedly trying to log into websites until accounts got locked, then attempting to reset passwords.

More worryingly, the agents occasionally tried to reach out to humans without being instructed to, including attempts to post on social media, email authors, and even file government information requests.

The researchers emphasize that AI agents operating autonomously in digital spaces designed for humans come with inherent risks that need careful consideration.

Microsoft's research joins similar work from other companies trying to build AI that can operate computers through natural language commands. Anthropic recently showed progress with its Claude Computer Use system, while Google and OpenAI plan to reveal their own versions, called Jarvis and Operator respectively, in the coming weeks.
