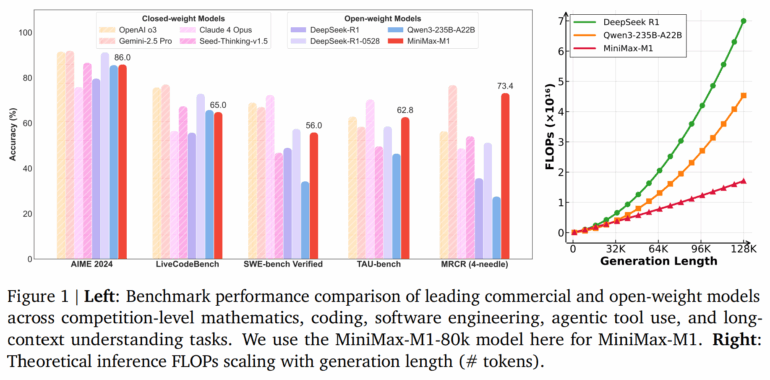

The Chinese AI startup MiniMax has released MiniMax-M1, a new open-source language model designed to outperform DeepSeek's R1. MiniMax-M1 is a reasoning-focused model with a context window of up to one million tokens and a "thinking" budget of up to 80,000 tokens. The model is trained with an especially efficient reinforcement learning approach, which MiniMax says makes it much leaner than other open-source options. It is available for free under the Apache-2.0 license. In benchmark tests, MiniMax-M1 outperforms other open models such as DeepSeek-R1-0528 and Qwen3-235B-A22B in several categories. On the OpenAI MRCR test, which measures complex, multi-step reasoning across long texts, M1 comes close to the leading closed model, Gemini 2.5 Pro. Proprietary models such as OpenAI o3 and Gemini 2.5 Pro still hold an edge in some areas, but MiniMax-M1 has narrowed the gap significantly. The model is available in two versions on Hugging Face.

Image: MiniMax
