ARTICLE AD BOX

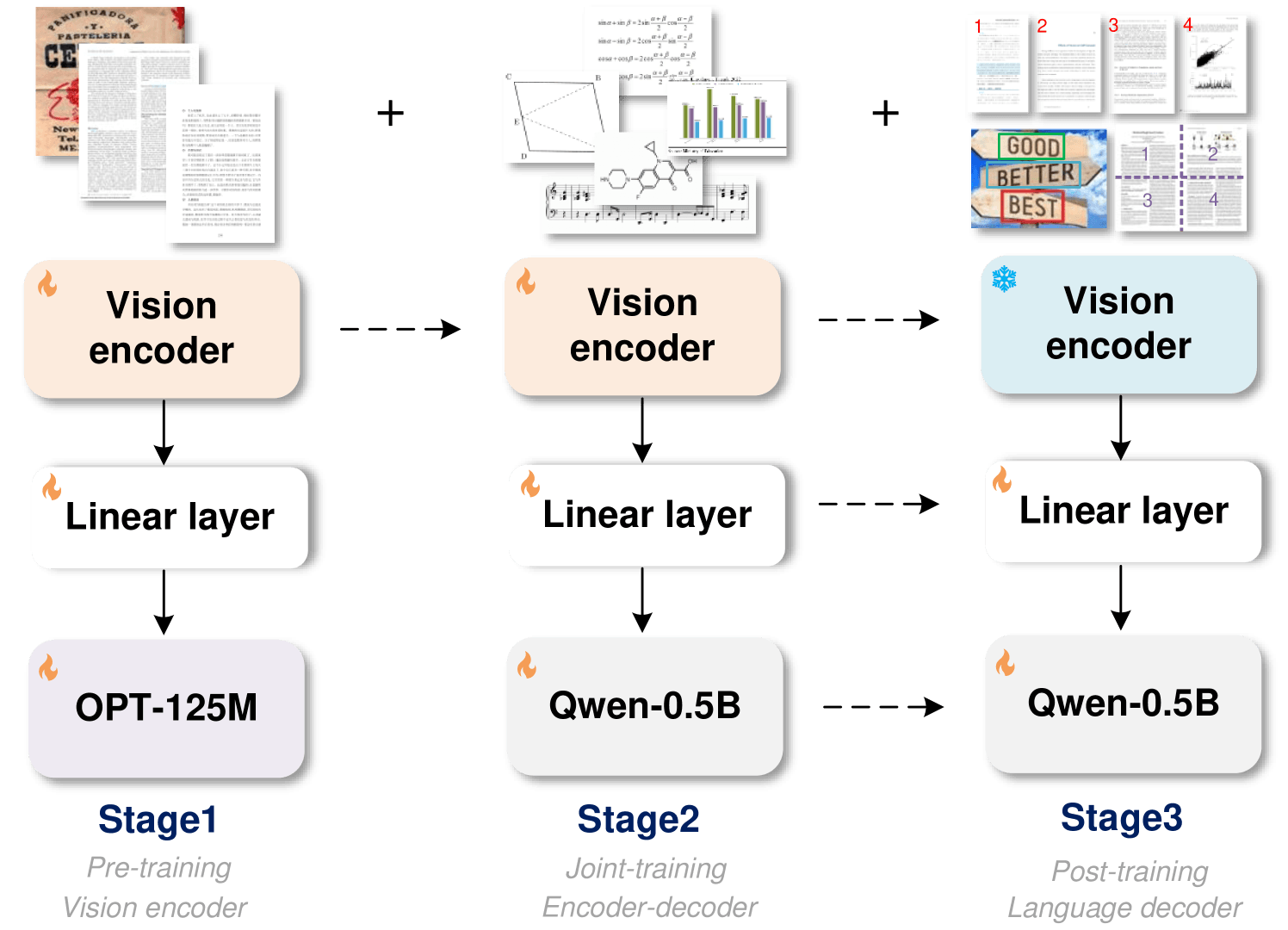

Researchers have created a new universal optical character recognition (OCR) model called GOT (General OCR Theory). Their paper introduces the concept of OCR 2.0, which aims to combine the strengths of traditional OCR systems and large language models.

According to the researchers, an OCR 2.0 model uses a unified end-to-end architecture and requires fewer resources than large language models, while being versatile enough to recognize more than just plain text.

GOT's architecture consists of an image encoder with approximately 80 million parameters and a speech decoder with 500 million parameters. The encoder compresses 1,024 x 1,024 pixel images into tokens, which the decoder then converts into text of up to 8,000 characters.

'OCR 2.0' unlocks automated processing of complex visual data in science, music, and analytics

The new model can recognize and convert various types of visual information into editable text. These include scene text and document text in English and Chinese, mathematical and chemical formulas, musical notes, simple geometric shapes, and diagrams with their components.

Ad

THE DECODER Newsletter

The most important AI news straight to your inbox.

✓ Weekly

✓ Free

✓ Cancel at any time

The diagram illustrates the three-stage architecture of the GOT (General OCR Theory) model, which combines traditional OCR systems with large language models. The researchers call this "OCR 2.0". | Image: Wei et al.

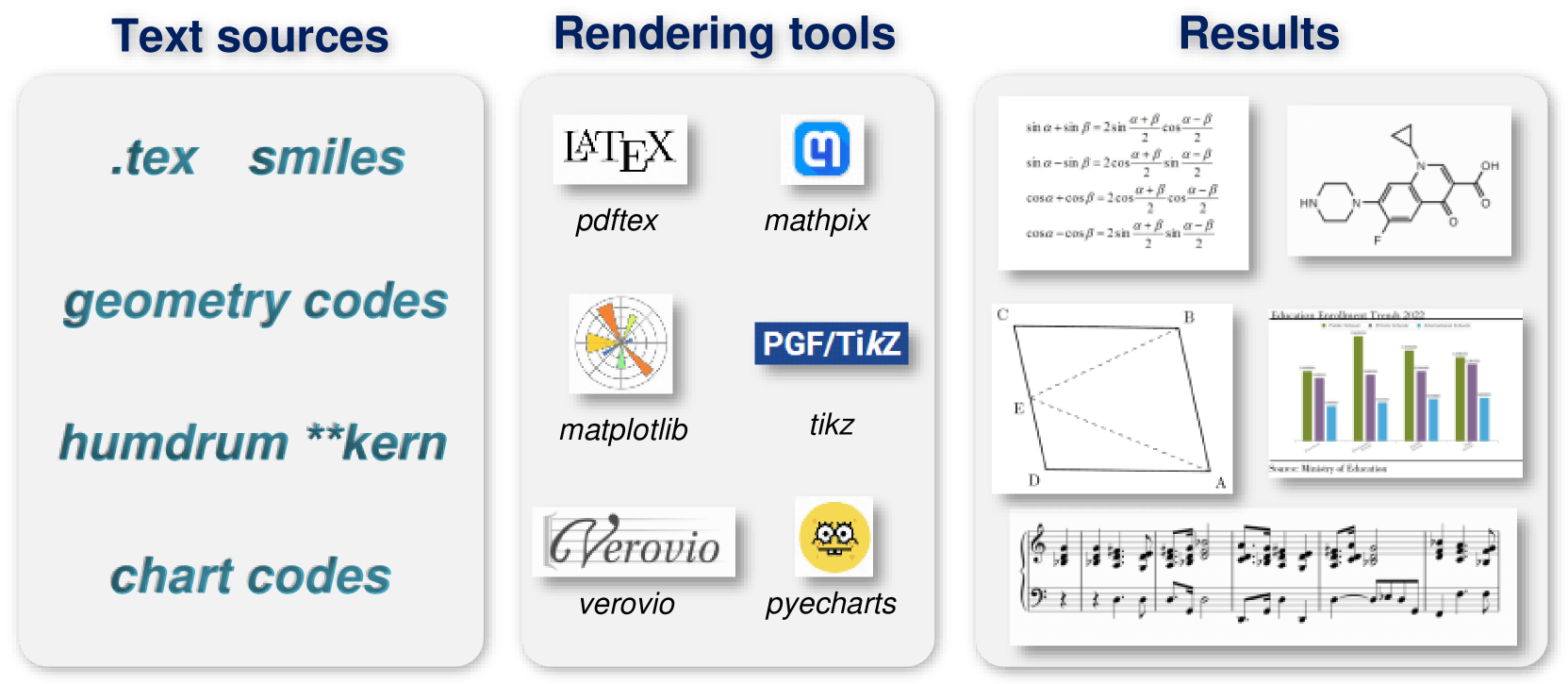

The diagram illustrates the three-stage architecture of the GOT (General OCR Theory) model, which combines traditional OCR systems with large language models. The researchers call this "OCR 2.0". | Image: Wei et al.To optimize training, the researchers first trained only the encoder on text recognition tasks. They then added Alibaba's Qwen-0.5B as a decoder and fine-tuned the entire model with diverse, synthetic data. The team used rendering tools such as LaTeX, Mathpix-markdown-it, TikZ, Verovio, Matplotlib, and Pyecharts to generate millions of image-text pairs for training.

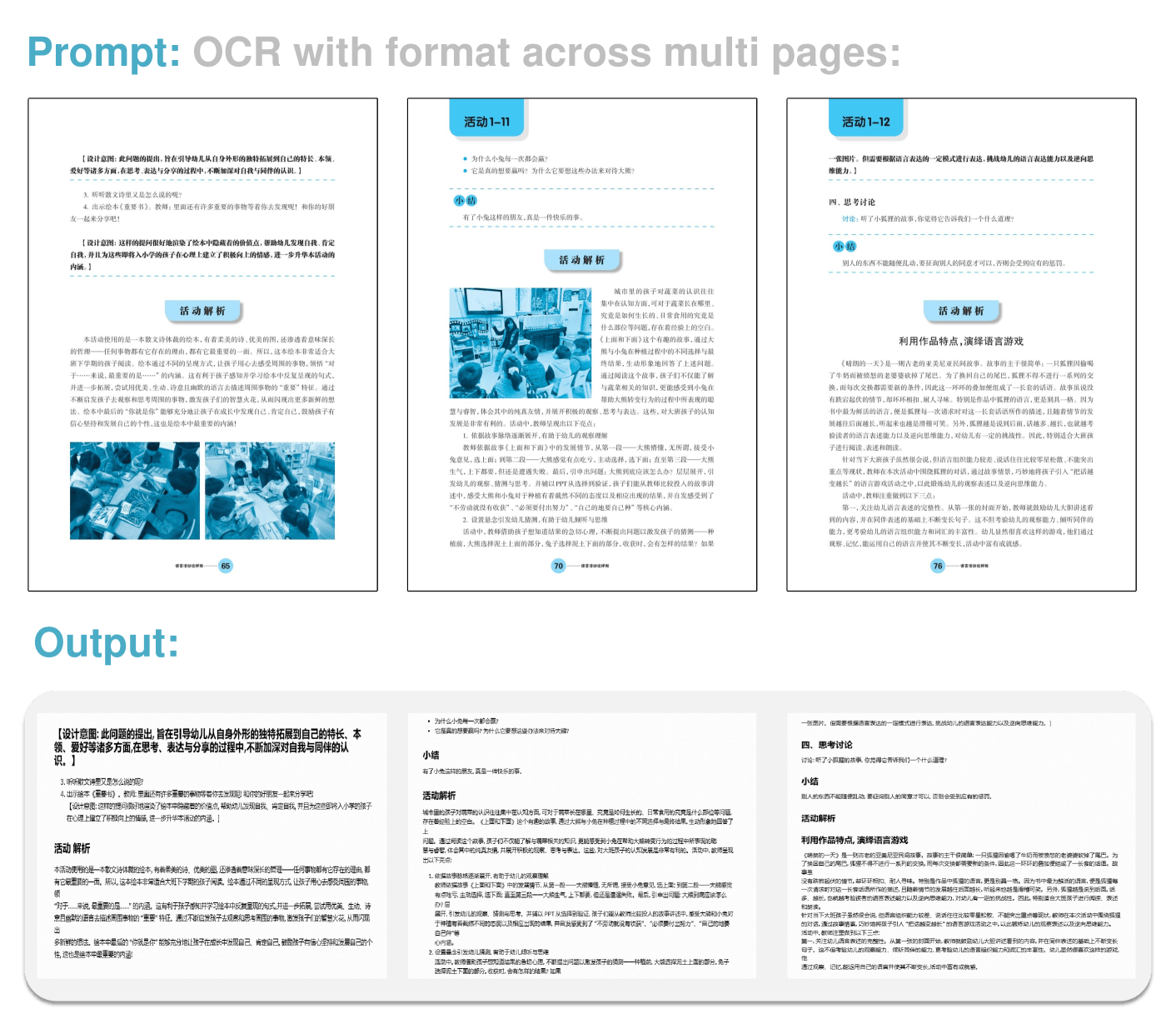

OCR 2.0 allows you to extract formatted text, headings, and even images from multiple pages and convert them into a structured digital form. | Image: Wei et al.

OCR 2.0 allows you to extract formatted text, headings, and even images from multiple pages and convert them into a structured digital form. | Image: Wei et al.The researchers report that GOT's modular design and synthetic data training allow for flexible expansion. New capabilities can be added without retraining the entire model. This approach allows for efficient updates and improvements to the system over time, they say.

This overview shows the workflow from text sources through rendering tools to visual results. It illustrates how various input formats such as .tex or SMILES codes can be transformed into complex mathematical formulas, chemical structures, geometric figures, and diagrams through specialized rendering tools. | Image: Wei et al.

This overview shows the workflow from text sources through rendering tools to visual results. It illustrates how various input formats such as .tex or SMILES codes can be transformed into complex mathematical formulas, chemical structures, geometric figures, and diagrams through specialized rendering tools. | Image: Wei et al.In experiments, GOT performed well across various OCR tasks. It achieved top scores in document and scene text recognition, even outperforming specialized models and large language models in diagram recognition.

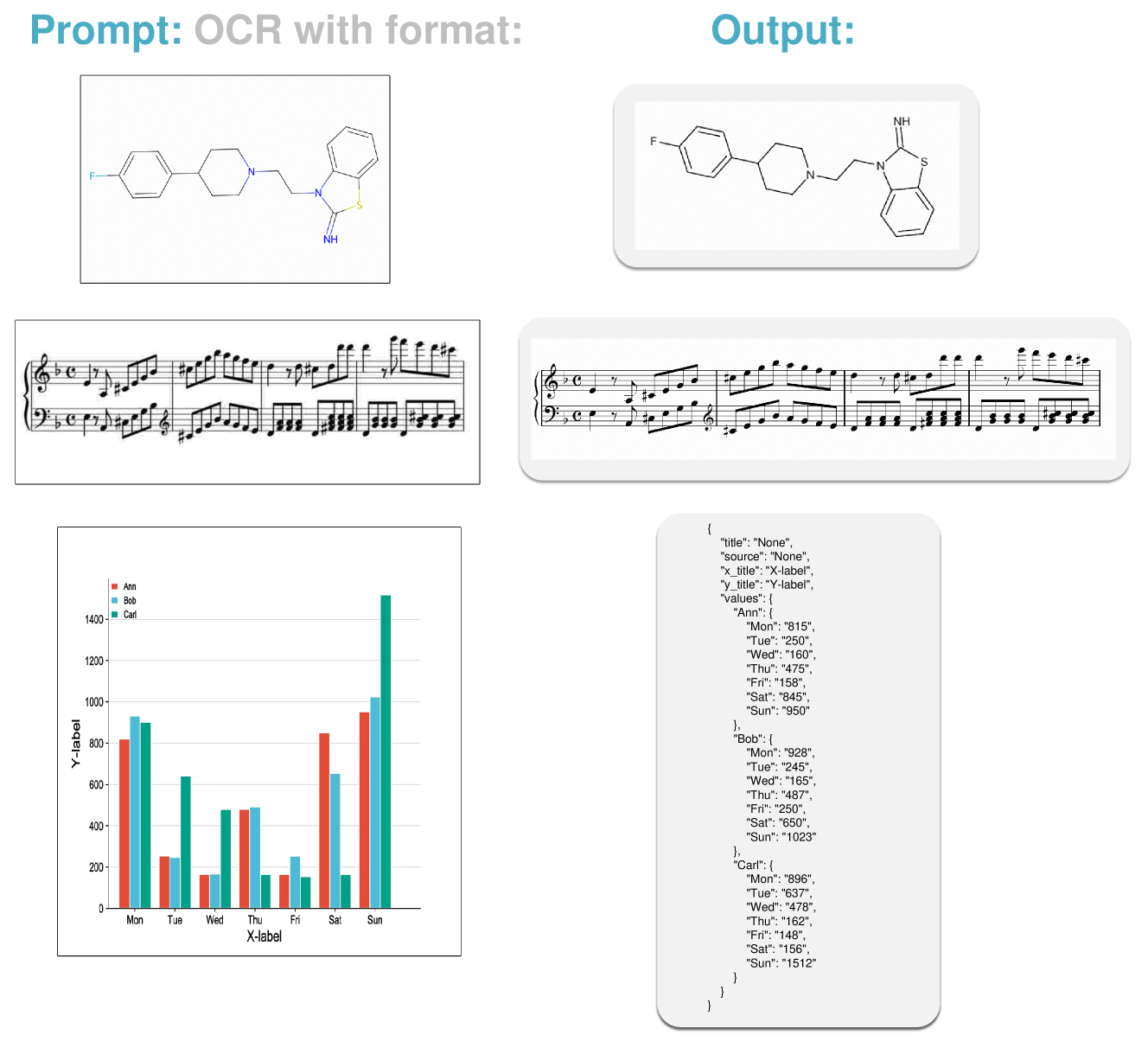

From complex chemical structural formulas to musical notation and data visualization: OCR 2.0 can accurately capture various formats and convert them into machine-readable formats. This opens up new possibilities for automated processing and analysis in science, music, and data analysis. | Image: Wei et al.

From complex chemical structural formulas to musical notation and data visualization: OCR 2.0 can accurately capture various formats and convert them into machine-readable formats. This opens up new possibilities for automated processing and analysis in science, music, and data analysis. | Image: Wei et al.The researchers have made a free demo and the code available on Hugging Face for others to use and build upon.

1 year ago

21

1 year ago

21