A new study from the University of Chicago finds major differences among commercial AI text detectors. While one tool performs nearly flawlessly, others fall short in key areas.

The researchers built a dataset of 1,992 human-written passages across six types: Amazon product reviews, blog posts, news articles, novel excerpts, restaurant reviews, and resumes. They then used four leading language models (GPT-4.1, Claude Opus 4, Claude Sonnet 4, and Gemini 2.0 Flash) to generate AI-written samples in the same categories.

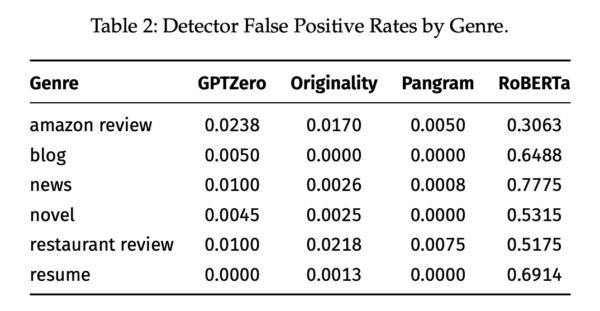

To compare the detectors, the research team tracked two main metrics. The False Positive Rate (FPR) is the share of human-written texts incorrectly flagged as AI-generated, and the False Negative Rate (FNR) is the share of AI-generated texts that go undetected.
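As a minimal sketch of how the two metrics are computed, the Python snippet below counts errors on a labeled set. The label convention (1 = AI-generated, 0 = human-written), the function name `error_rates`, and the toy labels are illustrative assumptions, not the study's code or data.

```python
from typing import Sequence


def error_rates(y_true: Sequence[int], y_pred: Sequence[int]) -> tuple[float, float]:
    """Return (FPR, FNR) for binary labels where 1 = AI-generated, 0 = human-written."""
    # False positive: a human-written text (0) that the detector flagged as AI (1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    # False negative: an AI-generated text (1) that the detector passed as human (0)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    n_human = sum(1 for t in y_true if t == 0)
    n_ai = sum(1 for t in y_true if t == 1)
    # Each rate is normalized by its own class size; an empty class yields 0.0 here
    fpr = fp / n_human if n_human else 0.0
    fnr = fn / n_ai if n_ai else 0.0
    return fpr, fnr


# Hypothetical toy labels, not data from the study
y_true = [0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 1, 0, 0, 1, 1, 0, 1]
print(error_rates(y_true, y_pred))  # (0.25, 0.25)
```

Because FPR is divided only by the number of human texts and FNR only by the number of AI texts, a detector can look good on one metric while doing poorly on the other, which is why the study reports both.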

Pangram sets the benchmark for AI text detection

In direct comparison, the commercial detector Pangram led the field. For medium and long passages, Pangram's FPR and FNR were almost zero. Even on short texts, error rates generally stayed below 0.01, except for Gemini 2.0 Flash restaurant reviews, where the FNR was 0.02.

OriginalityAI and GPTZero made up a second tier. Both worked well with longer texts, keeping FPRs at or below 0.01, but struggled with very short samples. They were also vulnerable to "humanizer" tools that disguise AI text as human-written.

Pangram is much less likely than competitors to misclassify human texts as AI-generated. | Image: Jabarian and Imas

The open-source RoBERTa-based detector performed the worst, mislabeling 30 to 69 percent of human texts as AI-generated.

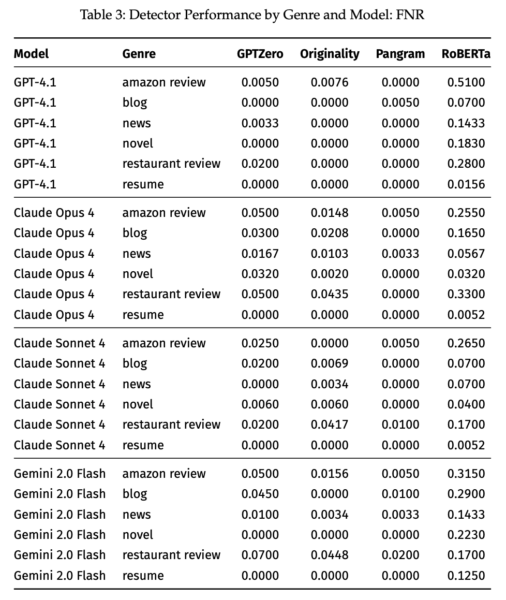

Detection accuracy depends on the AI model

Pangram accurately identified generated texts from all four models, with FNR never above 0.02. OriginalityAI's performance varied by model. It was better at catching Gemini 2.0 Flash outputs than those from Claude Opus 4. GPTZero was less affected by model choice but still lagged behind Pangram.

Pangram misses far fewer AI-generated texts than other detectors, regardless of model. | Image: Jabarian and Imas

Longer passages like novel excerpts and resumes were generally easier for all detectors to classify, while short reviews were harder. Pangram outperformed the rest, even on brief texts.

The researchers also tested each detector against StealthGPT, a tool that makes AI-generated text harder to detect. Pangram was mostly robust to these tricks, while other detectors struggled.

For texts under 50 words, Pangram was the most reliable. GPTZero had similar FPRs but higher overall error rates, and OriginalityAI often refused to process very short texts.

Pangram was also the most cost-effective, averaging $0.0228 per correctly identified AI text, about half the cost of OriginalityAI and a third of GPTZero's.
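The article does not spell out the cost formula. One plausible reading, assumed here, is total detector spend divided by the number of AI texts the tool correctly flagged. Under that assumption, a detector with a lower per-call price can still cost more per correct detection if it misses many AI texts. The function name `cost_per_correct_detection` and all prices and counts below are hypothetical.

```python
def cost_per_correct_detection(price_per_text: float, n_texts_scanned: int,
                               true_positives: int) -> float:
    """Total spend divided by the number of AI texts the detector correctly flagged.

    Assumed definition: the article reports only the resulting average, not the formula.
    """
    if true_positives <= 0:
        raise ValueError("no correct detections, so cost per detection is undefined")
    total_spend = price_per_text * n_texts_scanned
    return total_spend / true_positives


# Hypothetical detectors scanning the same 1,000 AI-generated texts
a = cost_per_correct_detection(0.02, 1000, 990)   # pricier per call, misses 1%
b = cost_per_correct_detection(0.015, 1000, 500)  # cheaper per call, misses 50%
print(round(a, 4), round(b, 4))  # 0.0202 0.03
```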

For organizations that need stricter controls, the study introduces the concept of "policy caps." Users set a maximum acceptable false positive rate, such as 0.5 percent, and calibrate each detector to stay within it.
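A policy cap can be implemented as a threshold calibration step. The sketch below assumes each detector returns a score where higher means more AI-like, picks a threshold from a set of known human-written texts so that the share flagged stays at or below the cap, and then measures the resulting FNR on AI-generated texts. This is a common calibration approach and not necessarily the study's exact procedure; the function names and scores are hypothetical.

```python
import math
from typing import Sequence


def calibrate_threshold(human_scores: Sequence[float], fpr_cap: float) -> float:
    """Pick a threshold t so that at most fpr_cap of human texts score strictly above t.

    Assumes higher scores mean "more AI-like"; a text is flagged as AI when score > t.
    """
    if not human_scores:
        raise ValueError("need at least one human-written calibration text")
    ranked = sorted(human_scores, reverse=True)
    # Maximum number of human texts we may flag; floor() keeps us at or under the cap
    allowed = math.floor(fpr_cap * len(ranked))
    if allowed >= len(ranked):
        return -math.inf  # the cap permits flagging every text
    # Only the `allowed` highest-scoring human texts can lie strictly above this value
    # (ties at the threshold are not flagged)
    return ranked[allowed]


def fnr_at_threshold(ai_scores: Sequence[float], threshold: float) -> float:
    """Share of AI-generated texts that are NOT flagged (score <= threshold)."""
    missed = sum(1 for s in ai_scores if s <= threshold)
    return missed / len(ai_scores)


# Hypothetical detector scores, not data from the study
human = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 0.95]
ai = [0.99, 0.97, 0.92, 0.85, 0.6]

for cap in (0.10, 0.005):
    t = calibrate_threshold(human, cap)
    print(cap, t, fnr_at_threshold(ai, t))
# 0.1 0.9 0.4
# 0.005 0.95 0.6
```

The toy run shows the trade-off the study describes: tightening the cap raises the threshold, which lowers false positives but lets more AI texts through. A detector whose AI and human scores are well separated loses little accuracy under a strict cap.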

When held to these stricter standards, Pangram was the only tool able to maintain high detection accuracy at a 0.5 percent FPR cap. Other detectors suffered significant drops in performance when required to minimize false positives.

Preparing for an AI detection arms race

The researchers warn that these results are only a snapshot, predicting an ongoing arms race between detectors, new AI models, and evasion tools. They recommend regular, transparent audits, similar to bank stress tests, to keep up.

The study also highlights the challenges of applying detection tools in real situations. While AI can help with brainstorming and editing, problems arise when it replaces original work in places where human input is needed, like schools or product reviews.

These findings stand out because previous research has often called AI detectors unreliable, especially in academic settings. OpenAI released its own detector, then withdrew it quickly due to poor accuracy.

OpenAI has not yet released a new, more capable detector. The researchers speculate that making ChatGPT outputs easy to identify is not in OpenAI's interest: many ChatGPT users are students, and a reliable detector could reduce usage in that group.
