ARTICLE AD BOX

Chinese researchers have discovered why AI models often struggle with complex reasoning tasks: They tend to drop promising solutions too quickly, leading to wasted computing power and lower accuracy.

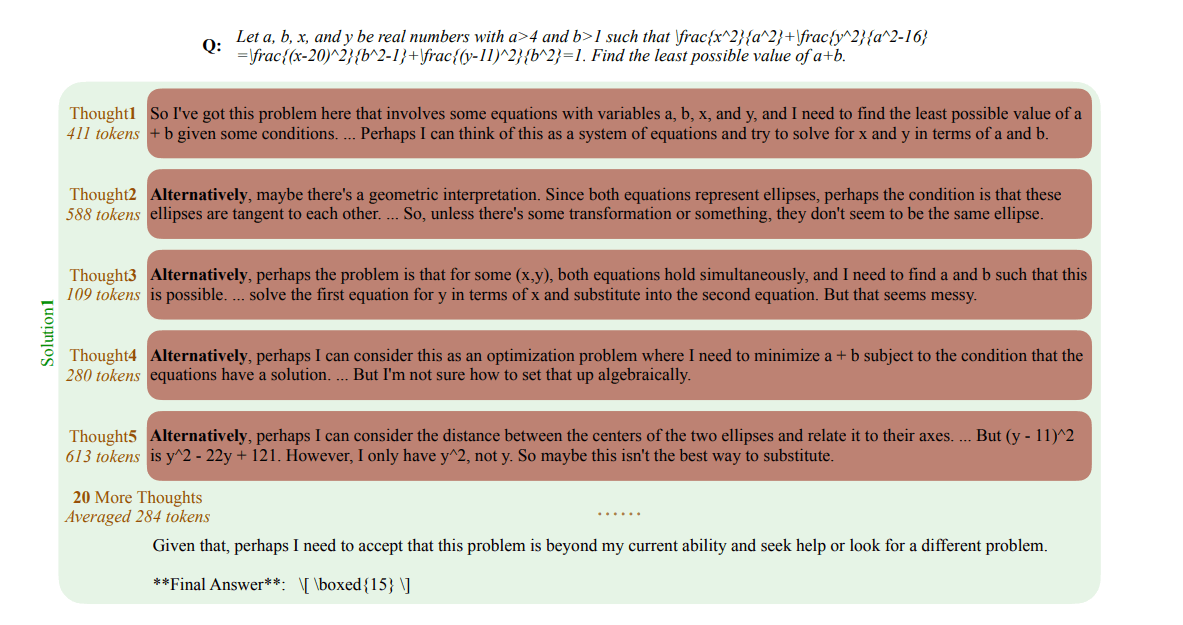

Researchers from Tencent AI Lab, Soochow University, and Shanghai Jiao Tong University show that reasoning models like OpenAI's o1 frequently jump between different problem-solving approaches, often starting fresh with phrases like "Alternatively…" This behavior becomes more noticeable as tasks get harder, with models using more computing power when they arrive at wrong answers.

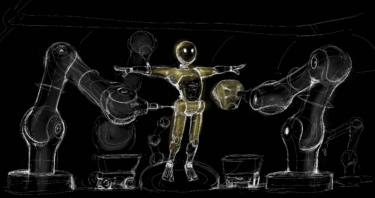

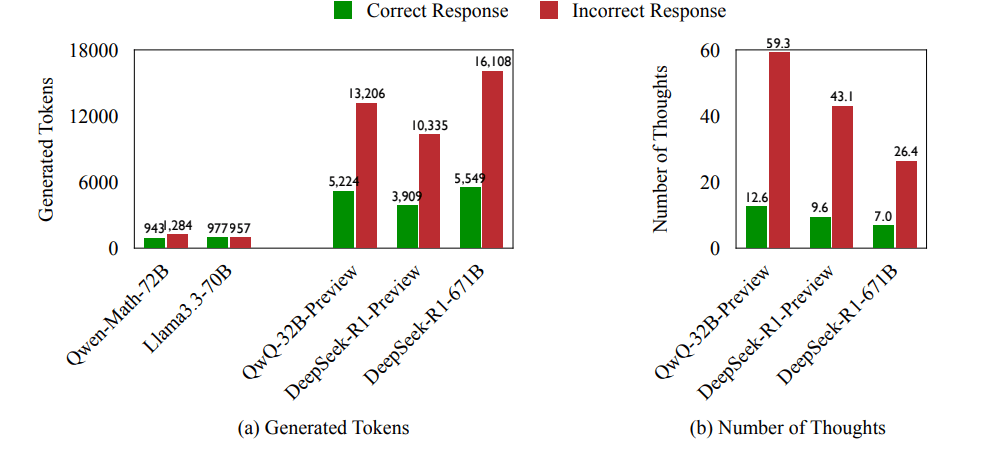

The QwQ-32B-Preview model tries 25 different solution approaches during a single task. Frequent strategy changes lead to inefficient use of resources. | Image: Wang et al.

The QwQ-32B-Preview model tries 25 different solution approaches during a single task. Frequent strategy changes lead to inefficient use of resources. | Image: Wang et al.The team found that 70 percent of incorrect answers contained at least one valid line of reasoning that wasn't fully explored. When models gave wrong answers, they used 225 percent more computing tokens and changed strategies 418 percent more often compared to correct solutions.

The number of tokens generated and the number of "thoughts" (solution approaches) for different models. On average, o1-like LLMs use 225 percent more tokens for incorrect answers than for correct ones, which is due to 418 percent more frequent thought changes. | Image: Wang et al.

The number of tokens generated and the number of "thoughts" (solution approaches) for different models. On average, o1-like LLMs use 225 percent more tokens for incorrect answers than for correct ones, which is due to 418 percent more frequent thought changes. | Image: Wang et al.To track this problem, the researchers created a metric that measures how efficiently models use their computing tokens when they get answers wrong. Specifically, they looked at how many tokens actually contribute to finding the right solution before the model switches to a different approach.

Ad

THE DECODER Newsletter

The most important AI news straight to your inbox.

✓ Weekly

✓ Free

✓ Cancel at any time

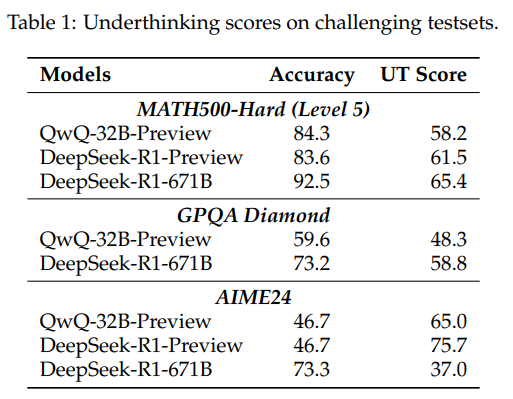

The team tested this using three challenging sets of problems: math competition questions, college physics problems, and chemistry tasks. They wanted to see how models like QwQ-32B-Preview and Deepseek-R1-671B handle complex reasoning. The results showed that o1-style models often waste tokens by jumping between different approaches too quickly. Surprisingly, models that get more answers right don't necessarily use their tokens more efficiently.

Underthinking scores (UT) of different models in logic tasks. The UT score measures the frequency of strategy changes during the reasoning process. | Image: Wang et al.

Underthinking scores (UT) of different models in logic tasks. The UT score measures the frequency of strategy changes during the reasoning process. | Image: Wang et al.Making models stick to their ideas

To address underthinking, the research team developed what they call a "thought switching penalty" (TIP). It works by adjusting the probability scores for certain tokens - the building blocks models use to form responses.

When the model considers using words that signal a strategy change, like "Alternatively", TIP punishes these choices by reducing their likelihood. This pushes the model to explore its current line of reasoning more thoroughly before jumping to a different approach.

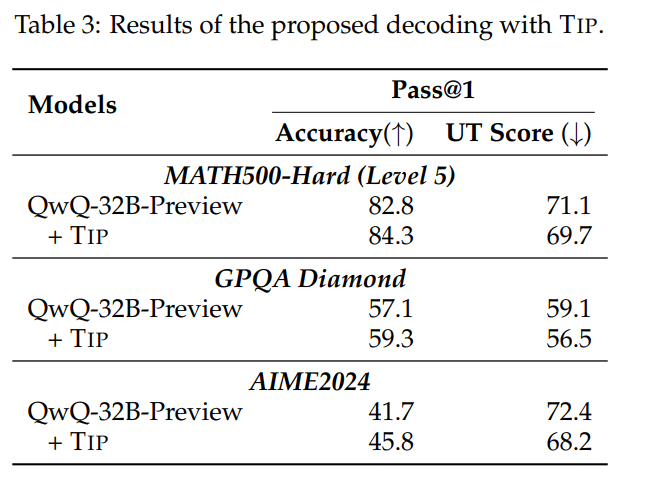

When using TIP, the QwQ-32B-Preview model solved more MATH500-Hard problems correctly - improving from 82.8 to 84.3 percent accuracy - and showed more consistent reasoning. The team saw similar improvements when they tried it on other tough problem sets like GPQA Diamond and AIME2024.

The table shows the results of the proposed decoding with "Thought Switching Penalty". The TIP method can increase the accuracy and decrease the UT score, indicating a slight improvement in the efficiency and stability of the reasoning process. | Image: Wang et al.

The table shows the results of the proposed decoding with "Thought Switching Penalty". The TIP method can increase the accuracy and decrease the UT score, indicating a slight improvement in the efficiency and stability of the reasoning process. | Image: Wang et al.These results point to something interesting: getting AI to reason well isn't just about having more computing power. Models also need to learn when to stick with a promising idea. Looking ahead, the research team wants to find ways for models to manage their own problem-solving approach better - knowing when to keep going with an idea and when it's actually time to try something new.

Recommendation

8 months ago

19

8 months ago

19