
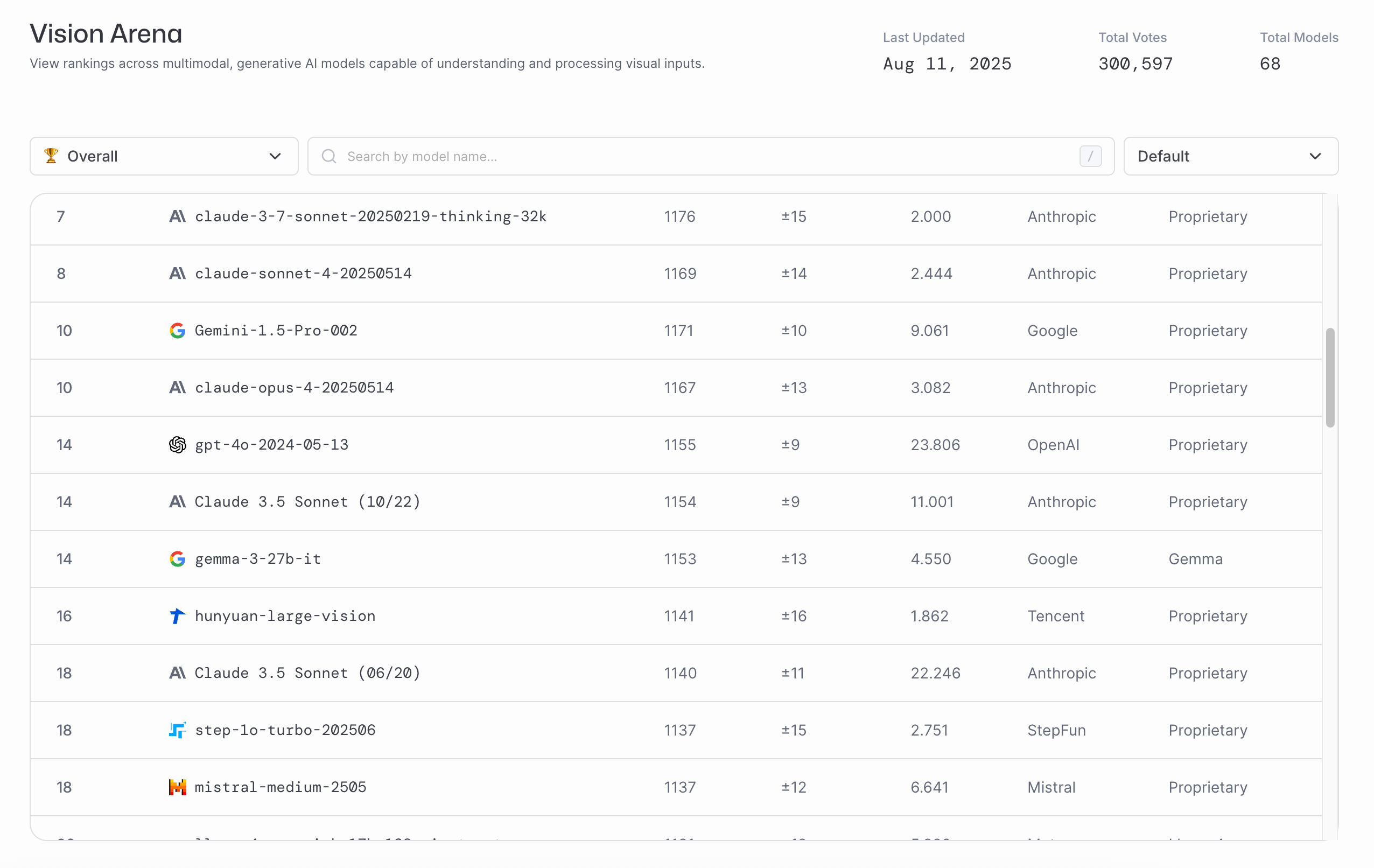

Tencent's new Hunyuan-Large-Vision model now leads all Chinese entries on the LMArena Vision Leaderboard, ranking just behind GPT-5 and Gemini 2.5 Pro.

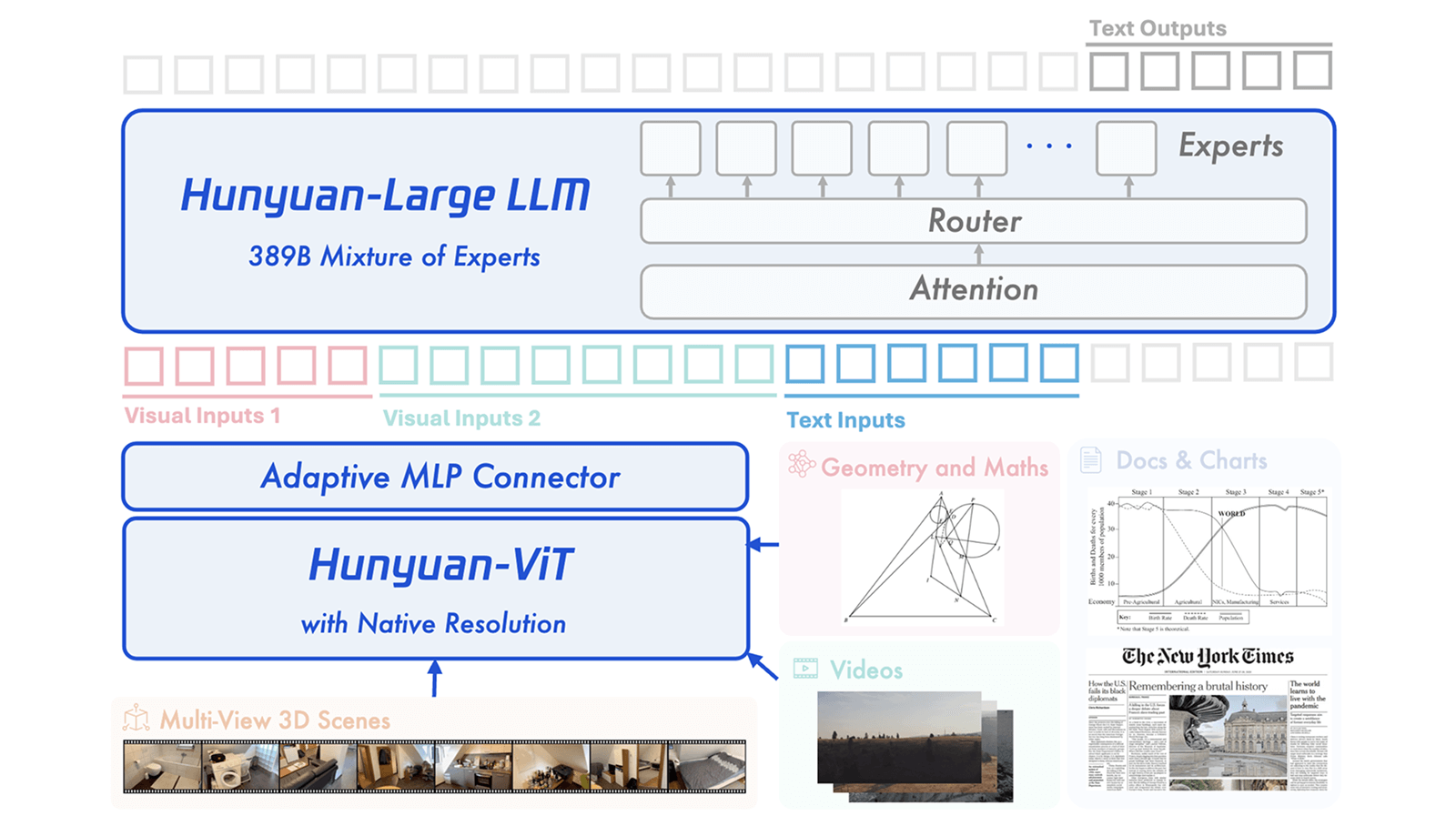

Built on a mixture-of-experts architecture with 389 billion parameters (52 billion active), it delivers performance comparable to Claude 3.5 Sonnet.

The LMArena Vision Leaderboard ranks AI image models by community preference in head-to-head comparisons. | Image: LMArena Leaderboard/Screenshot by THE DECODER

Among Chinese entries, Hunyuan-Large-Vision leads the pack, overtaking the previously top-rated Qwen2.5-VL in its largest version. Tencent says the model scored an average of 79.5 on the OpenCompass Academic Benchmark and stands out on multilingual tasks.

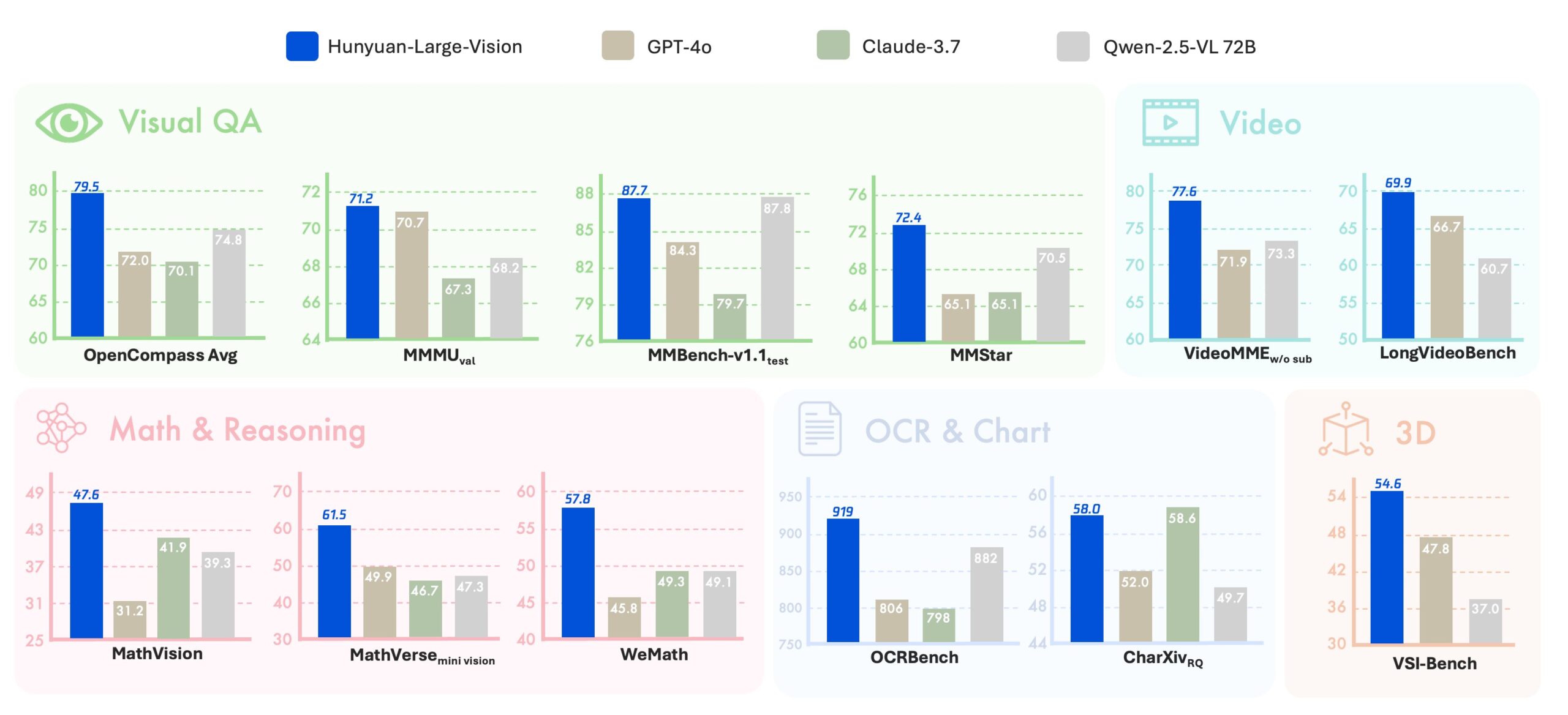

Hunyuan-Large-Vision leads nearly all visual QA, video, math, OCR, and 3D benchmarks, though the Western models in the comparison are not the newest releases. | Image: Tencent

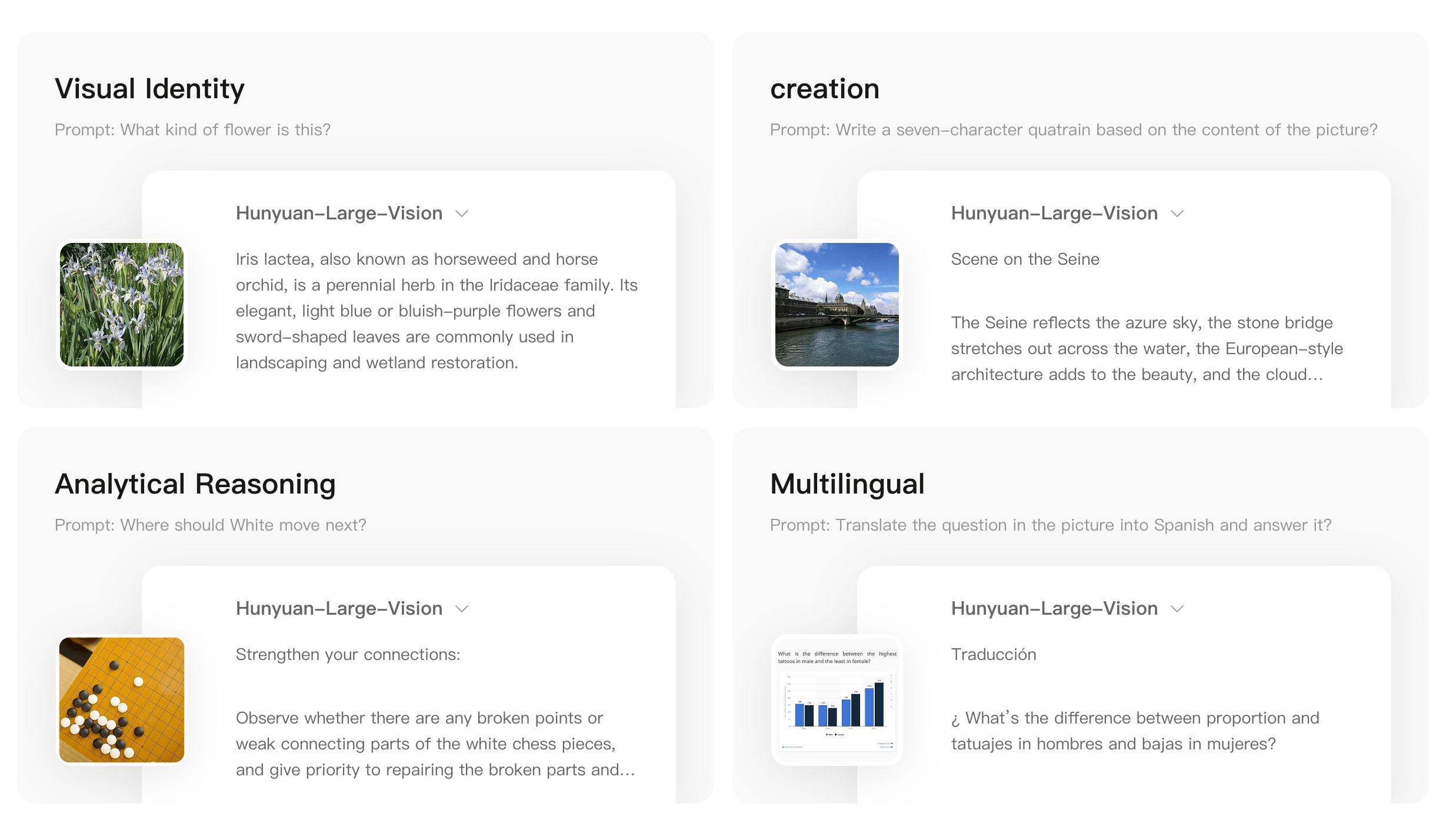

Tencent demonstrated the model's capabilities with a range of tasks: identifying Iris lactea, composing a poem from a photo of the Seine, offering strategic advice in Go, and translating questions into Spanish. Compared to Tencent's earlier vision models, Hunyuan-Large-Vision also handles less common languages more effectively.


Hunyuan-Large-Vision handles everything from species recognition to poetry, translation, and board games. | Image: Tencent

Hunyuan-Large-Vision is built around three main modules: a custom vision transformer with one billion parameters for image processing, a connector module to bridge vision and language, and a language model using the mixture-of-experts technique.

Tencent says the vision transformer was first trained to link images and text, then further refined with over a trillion multimodal text samples. In benchmarks, it outperforms other popular models on complex multimodal tasks.

The model processes images, video, and 3D content, and, according to Tencent, excels at visual reasoning, video analysis, and spatial understanding. | Image: Tencent

New training pipeline for multimodal data

Tencent built a pipeline that uses pre-trained AI models and specialized tools to turn noisy raw data into high-quality instruction data. The result is over 400 billion multimodal text samples across visual recognition, math, science, and OCR.

The model was fine-tuned with Rejection Sampling, which generates multiple responses and keeps only the best, while automated tools filter out errors and redundancies. More efficient reasoning was achieved by distilling complex answers into concise ones.
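The rejection-sampling step described above can be illustrated with a minimal sketch. This is not Tencent's implementation: the `generate` and `score` callables, the `num_candidates` and `min_score` parameters, and the exact-match duplicate filter are all hypothetical stand-ins for the model, the quality checker, and the automated filtering tools the article mentions.

```python
from typing import Callable, Dict, List, Optional


def rejection_sample(
    prompt: str,
    generate: Callable[[str], str],      # hypothetical: samples one response from a model
    score: Callable[[str, str], float],  # hypothetical: rates a (prompt, response) pair
    num_candidates: int = 4,
    min_score: float = 0.5,
) -> Optional[str]:
    """Sample several responses and keep the best one, or None if none is good enough."""
    candidates = [generate(prompt) for _ in range(num_candidates)]
    best = max(candidates, key=lambda response: score(prompt, response))
    return best if score(prompt, best) >= min_score else None


def build_dataset(
    prompts: List[str],
    generate: Callable[[str], str],
    score: Callable[[str, str], float],
    **kwargs,
) -> List[Dict[str, str]]:
    """Collect accepted responses, skipping rejected prompts and exact duplicates."""
    kept: List[Dict[str, str]] = []
    seen = set()
    for prompt in prompts:
        best = rejection_sample(prompt, generate, score, **kwargs)
        if best is None or (prompt, best) in seen:
            continue  # reject low-quality or redundant samples
        seen.add((prompt, best))
        kept.append({"prompt": prompt, "response": best})
    return kept
```

In practice the scorer might be a reward model or an answer checker; the accepted pairs would then serve as fine-tuning data.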

Training used Tencent's Angel-PTM framework and a multi-level load balancing strategy, which cut GPU bottlenecks by 18.8 percent and sped up the process.


Hunyuan-Large-Vision is available exclusively via API on Tencent Cloud. Unlike some previous Tencent models, this one is not open source. With its 389 billion parameters, it would not be practical to run on consumer hardware anyway.
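A rough back-of-the-envelope calculation shows why. The sketch below estimates the memory needed just to hold the weights. The precisions listed are illustrative assumptions, not formats Tencent has announced, and it ignores activations and caches. It also assumes, as is typical for mixture-of-experts models, that all experts must stay in memory even though only 52 billion parameters are active per token.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed to store the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


TOTAL_PARAMS = 389e9   # total parameters, from the article
ACTIVE_PARAMS = 52e9   # parameters active per token, from the article

# Illustrative precisions (assumptions): 16-bit, 8-bit, and 4-bit weights.
for label, bytes_per_param in [("16-bit", 2.0), ("8-bit", 1.0), ("4-bit", 0.5)]:
    gb = weight_memory_gb(TOTAL_PARAMS, bytes_per_param)
    print(f"{label}: about {gb:.0f} GB of weights")

# Share of the parameters used for any single token.
active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS
print(f"Active share per token: {active_fraction:.1%}")
```

At 16-bit precision the weights alone come to roughly 778 GB, far beyond the few tens of gigabytes available on consumer GPUs.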
