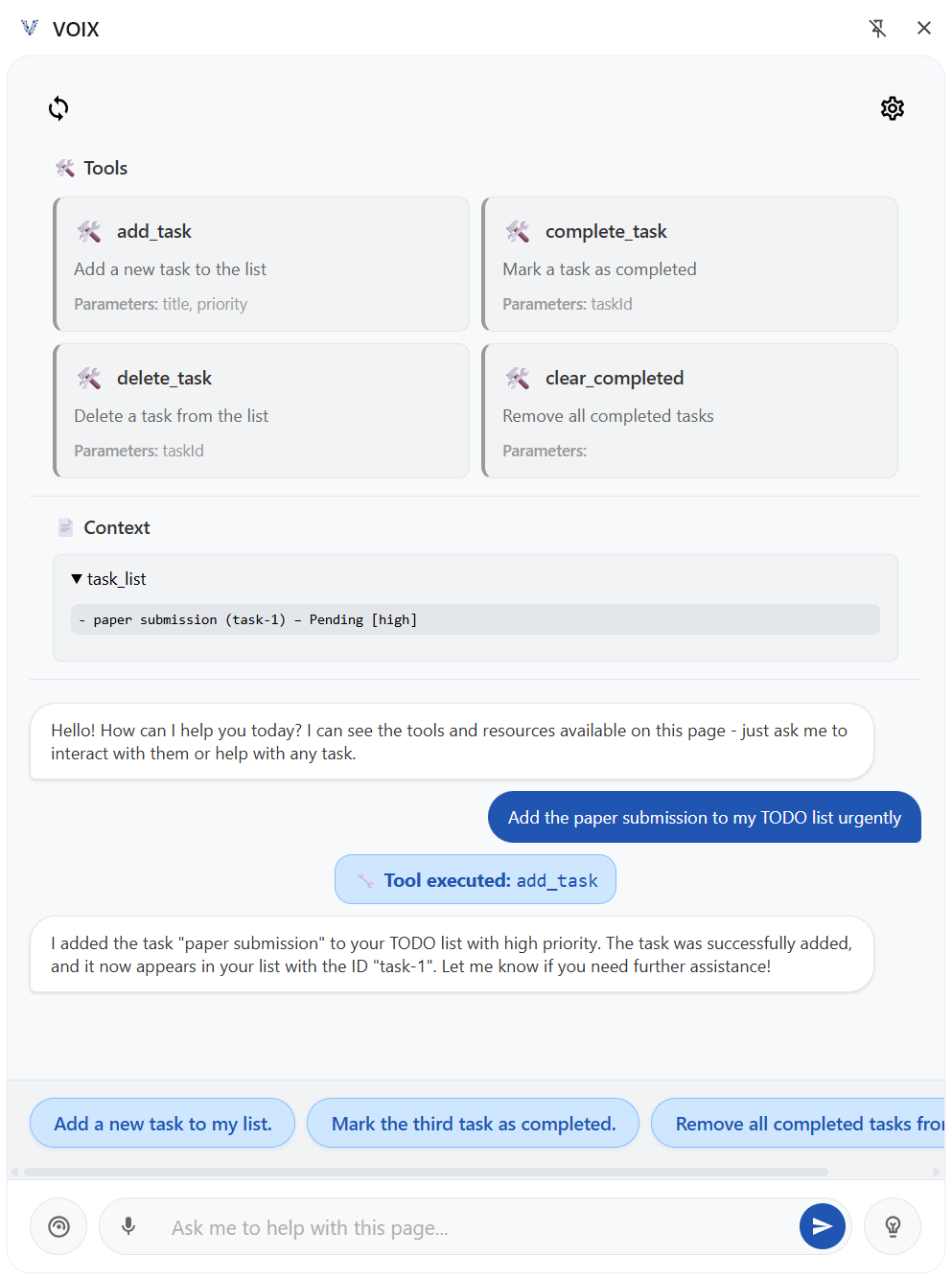

Researchers at TU Darmstadt have introduced the VOIX framework, giving websites two new HTML elements so AI agents can recognize available actions without having to visually interpret complex user interfaces.

The <tool> element lists actions by name, parameters, and description. The <context> element gives the agent current information about the application's state.

For example, a to-do list might include a <tool name="add_task"> element. It defines parameters like "title" and "priority" and connects to the app's logic through JavaScript. When an agent adds a task, it uses this tool directly rather than searching for input fields and buttons.
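The to-do example can be modeled as a small sketch. This is not VOIX's actual markup or API: the `DeclaredTool` type, the `callTool` helper, and the `"normal"` default priority are hypothetical names and values chosen for illustration. The sketch only shows the idea of a named action with declared parameters that is wired to the app's own logic and invoked directly.

```typescript
// Hypothetical model of a declared tool; these names are illustrative, not VOIX's API.
type ParamType = "string" | "number" | "boolean";

interface ToolParam {
  name: string;
  type: ParamType;
  required: boolean;
}

interface DeclaredTool {
  name: string;
  description: string;
  params: ToolParam[];
  // Handler that connects the declaration to the app's own logic.
  handler: (args: Record<string, unknown>) => void;
}

interface Task {
  title: string;
  priority: string;
}

const tasks: Task[] = [];

const addTask: DeclaredTool = {
  name: "add_task",
  description: "Add a task to the to-do list",
  params: [
    { name: "title", type: "string", required: true },
    { name: "priority", type: "string", required: false },
  ],
  handler: (args) => {
    tasks.push({
      title: String(args.title),
      // Assumed default when the agent omits the optional parameter.
      priority: typeof args.priority === "string" ? args.priority : "normal",
    });
  },
};

// Validate the arguments against the declaration, then run the handler.
function callTool(tool: DeclaredTool, args: Record<string, unknown>): void {
  for (const p of tool.params) {
    const value = args[p.name];
    if (value === undefined) {
      if (p.required) throw new Error(`Missing required parameter: ${p.name}`);
      continue;
    }
    if (typeof value !== p.type) {
      throw new Error(`Parameter ${p.name} must be of type ${p.type}`);
    }
  }
  tool.handler(args);
}

// The agent calls the tool by name with structured arguments,
// instead of locating input fields and buttons.
callTool(addTask, { title: "Buy milk", priority: "high" });
```

Because the parameters are declared up front, a malformed call can be rejected before it reaches the app's logic.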

The VOIX Chrome extension displays a sidebar with tool cards and a task list, letting an LLM agent manage tasks through chat. | Image: Schultze et al.

Structure gives agents more control

The framework divides responsibilities: the website declares its functions, a browser agent mediates between the site and the AI, and the inference provider decides what to do using this structured data.

This setup departs from how current agents work: they try to "see" websites as humans do, a process that is unreliable and vulnerable to attacks.

"Agents must infer affordances from human-oriented user interfaces, leading to brittle, inefficient, and insecure interactions," the researchers say.

The architecture also aims to improve privacy. The browser agent sends user conversations directly to the LLM provider, keeping the website out of the loop. Agents only see data that has been explicitly released, not the whole page. VOIX runs on the client side, so site owners don't have to pay for LLM inference.
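The division of responsibilities can be sketched as a small data-flow model. Everything here is a hypothetical illustration, not VOIX's code: the `mediate` function, the type names, and the stub provider are all assumptions. The sketch shows the two properties described above: the provider receives the conversation plus only the declared tools and context, and the site receives only the resulting tool call.

```typescript
// Hypothetical types that model the data flow; none of these names come from VOIX.
interface ToolSummary {
  name: string;
  description: string;
}

interface PageDeclarations {
  tools: ToolSummary[]; // actions the site declared
  context: string[]; // state the site explicitly released
}

interface ProviderRequest {
  userMessage: string;
  tools: ToolSummary[];
  context: string[];
}

interface ToolCall {
  tool: string;
  args: Record<string, unknown>;
}

type Provider = (request: ProviderRequest) => ToolCall | null;
type SiteDispatcher = (call: ToolCall) => void;

function mediate(
  page: PageDeclarations,
  userMessage: string,
  provider: Provider,
  dispatchToSite: SiteDispatcher
): ToolCall | null {
  // The provider sees the conversation plus declared data, not the whole page.
  const call = provider({ userMessage, tools: page.tools, context: page.context });
  if (call === null) return null;

  // Refuse calls to tools the page never declared.
  if (!page.tools.some((t) => t.name === call.tool)) {
    throw new Error(`Undeclared tool: ${call.tool}`);
  }

  // The site receives only the tool name and arguments, never the user message.
  dispatchToSite({ tool: call.tool, args: call.args });
  return call;
}

// Usage with a stub provider standing in for a real LLM.
const received: ToolCall[] = [];
const stubProvider: Provider = (request) =>
  request.userMessage.includes("milk")
    ? { tool: "add_task", args: { title: "Buy milk" } }
    : null;

mediate(
  { tools: [{ name: "add_task", description: "Add a task" }], context: ["2 open tasks"] },
  "Remind me to buy milk",
  stubProvider,
  (call) => {
    received.push(call);
  }
);
```

In this model the site-side dispatcher has no access to `userMessage`, which mirrors the claim that the website stays out of the conversation.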

To test VOIX, the team ran a three-day hackathon with 16 developers. Six teams built different apps using the framework, most with no prior experience. Results show strong usability: the System Usability Scale score reached 72.34, above the industry average of 68. Developers also rated system understanding and performance highly.

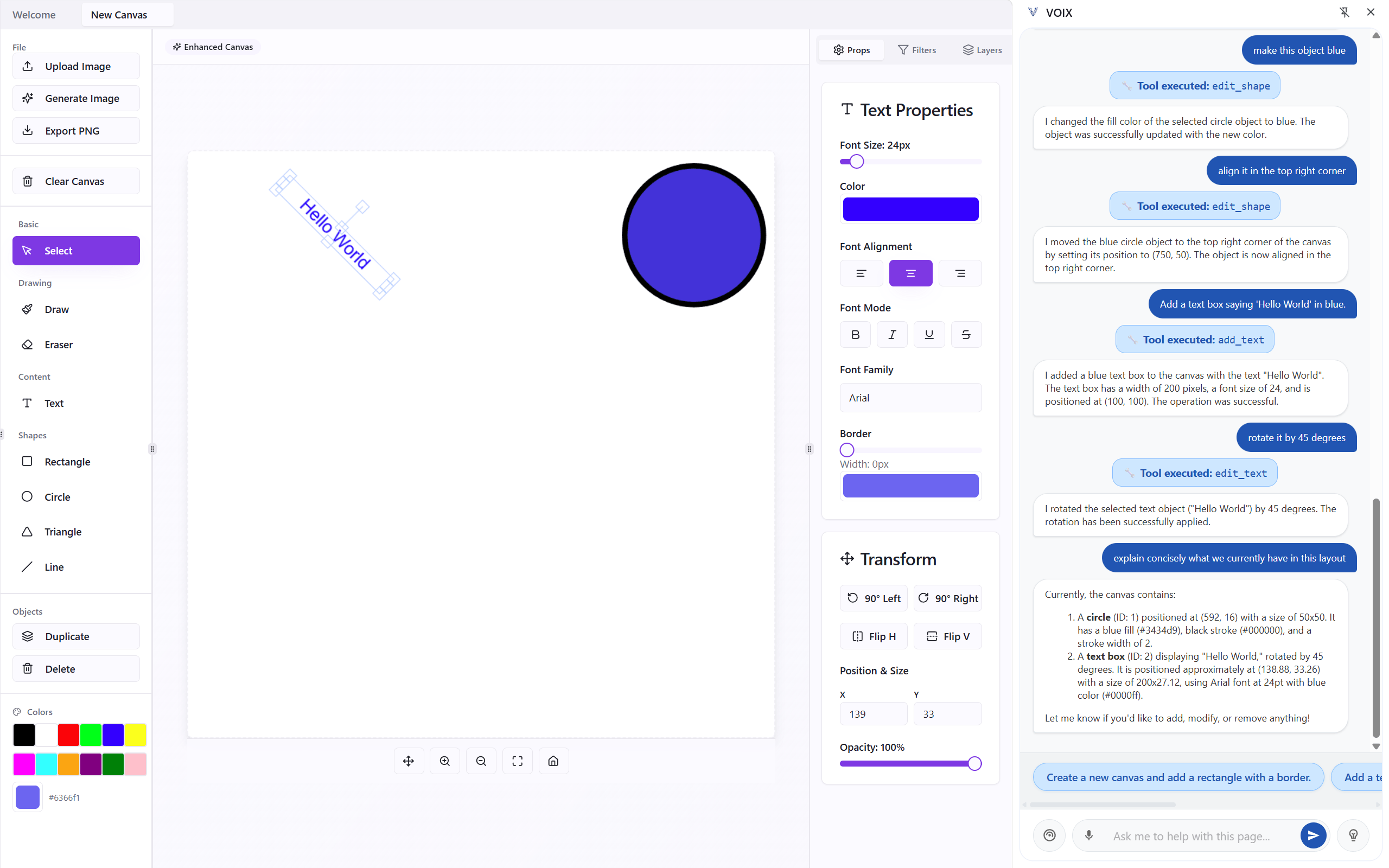

In this demo, VOIX applies user commands contextually, changing the color and position of text and rotating it, all by prompt. | Image: Schultze et al.

The apps built during the hackathon show VOIX's flexibility. One demo let users do basic graphic design, clicking objects and giving voice commands like "rotate this by 45 degrees." A fitness app created full workout plans from prompts like "create a full week high-intensity training plan for my back and shoulders." Other projects included a soundscape creator that changes audio environments based on commands like "make it sound like a rainforest," and a Kanban tool that generates tasks from prompts.

Big speed boost for AI web agents

Latency benchmarks show VOIX is significantly faster than traditional agents. VOIX completed tasks in just 0.91 to 14.38 seconds, compared to 4.25 seconds to over 21 minutes for standard AI browser agents.

Take rotating a green triangle 90 degrees: VOIX did it in a single second, while Perplexity Comet needed ninety seconds for the same action. Vision-based agents waste time parsing screenshots, guessing the right action, and verifying results. Some complex tasks failed altogether with these tools.

Still, the researchers highlight a few hurdles for real-world use. In large or legacy codebases, VOIX declarations can fall out of sync with the user interface. Developers also need to rethink their approach by defining specific agent actions and deciding which tools to make available. Finding the right balance between basic functions and more complex, intent-based commands remains a challenge.

As a reference, the researchers built a Chrome extension with chat and voice support that works with any OpenAI-compatible API. The framework runs with both cloud-based and local LLMs, and was tested with Qwen3-235B-A22B.
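OpenAI-compatible chat APIs accept tool definitions in a function-calling format, where each tool carries a JSON Schema `parameters` object. The sketch below shows how a declared tool could be translated into that format. Whether the reference extension performs exactly this translation is an assumption, and `DeclaredTool`, `DeclaredParam`, and `toFunctionTool` are hypothetical names.

```typescript
// Hypothetical shape of a tool declaration read from the page.
type ParamType = "string" | "number" | "boolean";

interface DeclaredParam {
  name: string;
  type: ParamType;
  description: string;
  required: boolean;
}

interface DeclaredTool {
  name: string;
  description: string;
  params: DeclaredParam[];
}

// Tool entry in the function-calling format used by OpenAI-compatible chat APIs.
interface FunctionTool {
  type: "function";
  function: {
    name: string;
    description: string;
    parameters: {
      type: "object";
      properties: Record<string, { type: ParamType; description: string }>;
      required: string[];
    };
  };
}

function toFunctionTool(tool: DeclaredTool): FunctionTool {
  const properties: Record<string, { type: ParamType; description: string }> = {};
  const required: string[] = [];
  for (const p of tool.params) {
    properties[p.name] = { type: p.type, description: p.description };
    if (p.required) required.push(p.name);
  }
  return {
    type: "function",
    function: {
      name: tool.name,
      description: tool.description,
      parameters: { type: "object", properties, required },
    },
  };
}

const addTaskSchema = toFunctionTool({
  name: "add_task",
  description: "Add a task to the to-do list",
  params: [
    { name: "title", type: "string", description: "Task title", required: true },
    { name: "priority", type: "string", description: "Task priority", required: false },
  ],
});
```

An array of such entries would go in the `tools` field of a chat completion request, alongside the user's messages.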

Pushing toward new AI web standards

Companies like OpenAI and Perplexity imagine a future where chatbots are the main gateway to the web, with AI browsers such as Atlas and Comet handling everything from booking travel to online shopping, no custom APIs required.

In reality, today's language models still struggle with the complexity of modern websites, and prompt injection is a persistent threat. Making agent-driven web browsing practical may require new standards that present site information in ways LLMs can reliably understand. Initiatives like llms.txt and the rise of MCP servers suggest the industry is ready for change. VOIX wants to be part of this next wave of web standards.
