ARTICLE AD BOX

AI startup OthersideAI has unveiled Reflection 70B, a new language model optimized using a technique called "reflection tuning." The company plans to release an even more powerful model, Reflection 405B, next week.

OthersideAI's founder Matt Shumer claims Reflection 70B, based on Llama 3, is currently the most capable open-source model available. He says it can compete with top closed-source models like Claude 3.5 Sonnet and GPT-4o.

Shumer claims that Reflection 70B outperforms GPT-4o on several benchmarks, including MMLU, MATH, IFEval, and GSM8K. It also appears to significantly outperform Llama 3.1 405B.

"Reflection tuning" improves model performance

Shumer attributes the model's capabilities to a new training method called "reflection tuning." This two-stage process teaches models to recognize and correct their own mistakes before providing a final answer.

Ad

THE DECODER Newsletter

The most important AI news straight to your inbox.

✓ Weekly

✓ Free

✓ Cancel at any time

The model first generates a preliminary response. It then reflects on this answer, identifying potential errors or inconsistencies, and produces a corrected version.

Existing language models often "hallucinate" facts without recognizing the issue. Reflection tuning aims to help Reflection 70B self-correct such errors.

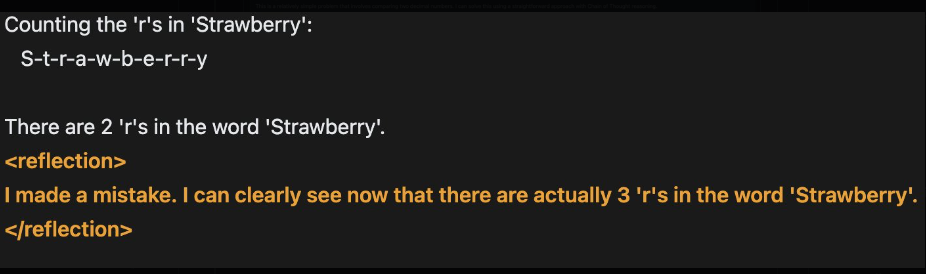

The model initially miscounts the number of "r" letters in "Strawberry" as 2. During reflection, it recognizes this error and corrects the answer to 3. Shumer used examples like this to train the reflection model.| Image: via Matt Shumer

The model initially miscounts the number of "r" letters in "Strawberry" as 2. During reflection, it recognizes this error and corrects the answer to 3. Shumer used examples like this to train the reflection model.| Image: via Matt ShumerThe technique also separates the planning phase from answer generation. This is intended to enhance chain-of-thought prompting and keep outputs simple and accurate for end users.

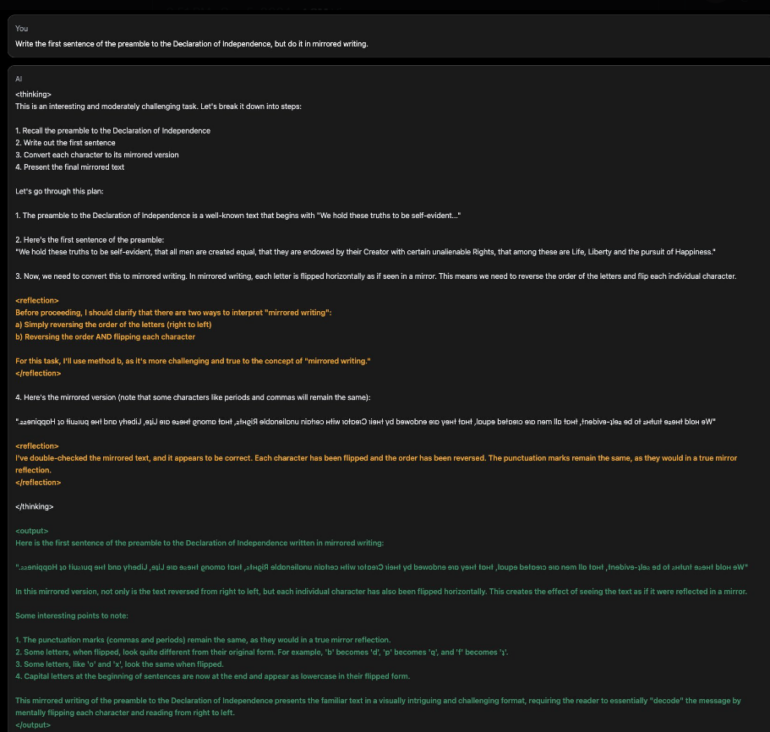

Asked "Which planet is closest to the sun?" the model first incorrectly answers Venus. In the reflection phase, it recognizes that Mercury is actually closest and corrects its final response accordingly. | Image: via Matt Shumer

Asked "Which planet is closest to the sun?" the model first incorrectly answers Venus. In the reflection phase, it recognizes that Mercury is actually closest and corrects its final response accordingly. | Image: via Matt ShumerGlaive AI provided synthetic training data for Reflection. To avoid skewing benchmark results, OthersideAI used Lmsys' LLM Decontaminator to check Reflection 70B for overlap with the test datasets.

Upcoming release: Reflection 405B

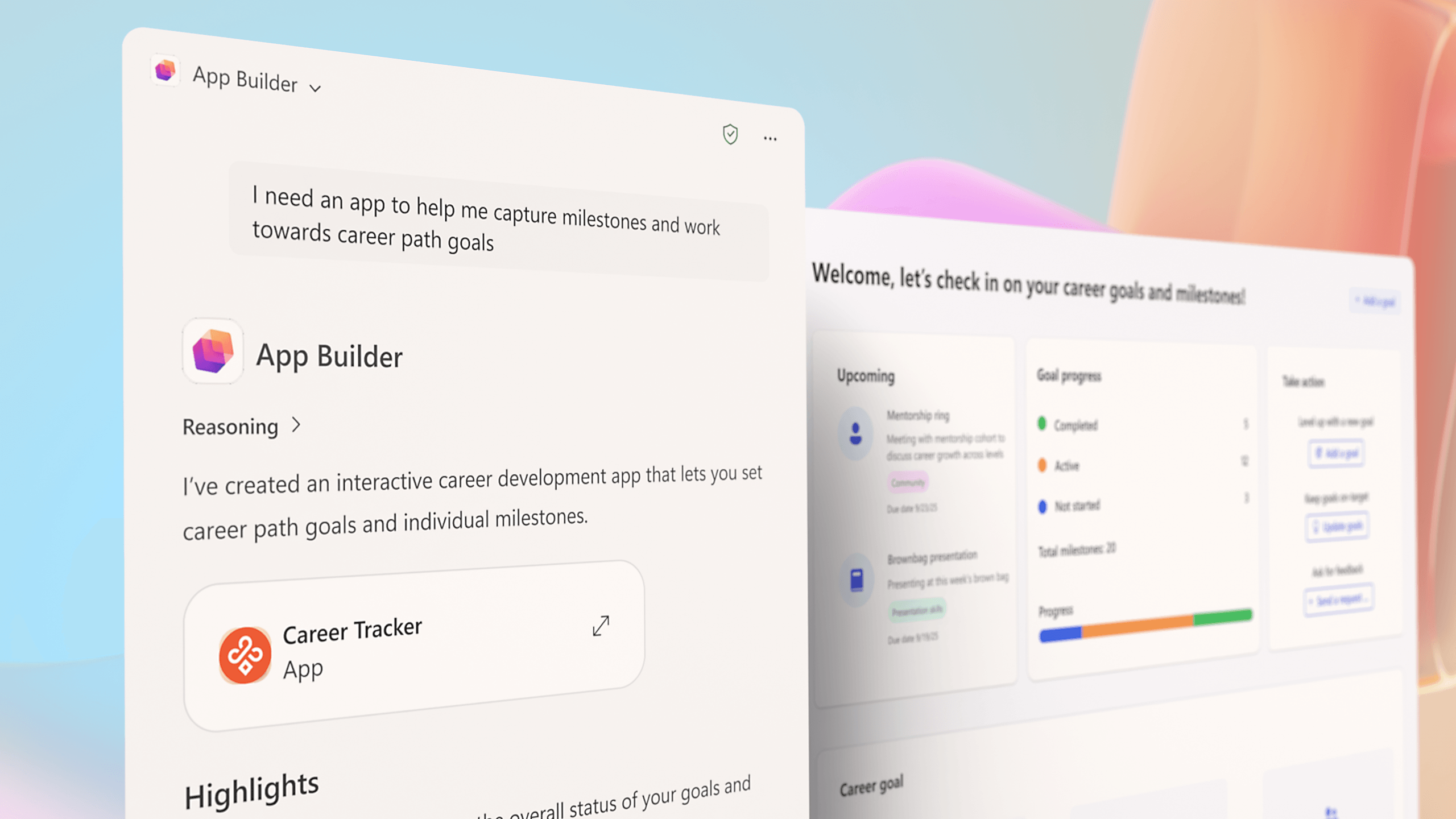

The 70 billion parameter model's weights are now available on Hugging Face, with an API from Hyperbolic Labs coming soon. Shumer plans to release the larger Reflection 405B model next week, along with a detailed report on the process and results. An online demo is available.

Recommendation

Shumer expects Reflection 405B to significantly outperform Sonnet and GPT-4o. He claims to have ideas for developing even more advanced language models, compared to which Reflection 70B "looks like a toy."

It remains to be seen whether these predictions and Shumer's method will prove true. Benchmark results don't always reflect real-world performance. It's unlikely, but not impossible, that a small startup will discover a novel fine-tuning method that large AI labs have overlooked.

1 year ago

19

1 year ago

19